Earlier this week I did a live stream on the upcoming Wolverine 5.0 release where I lightly touched on the concept for our planned SignalR integration with Wolverine. While there wasn’t that much to show yesterday, a big pull request just landed and I think the APIs and the approach have gelled enough that it’s worth a sneak peek.

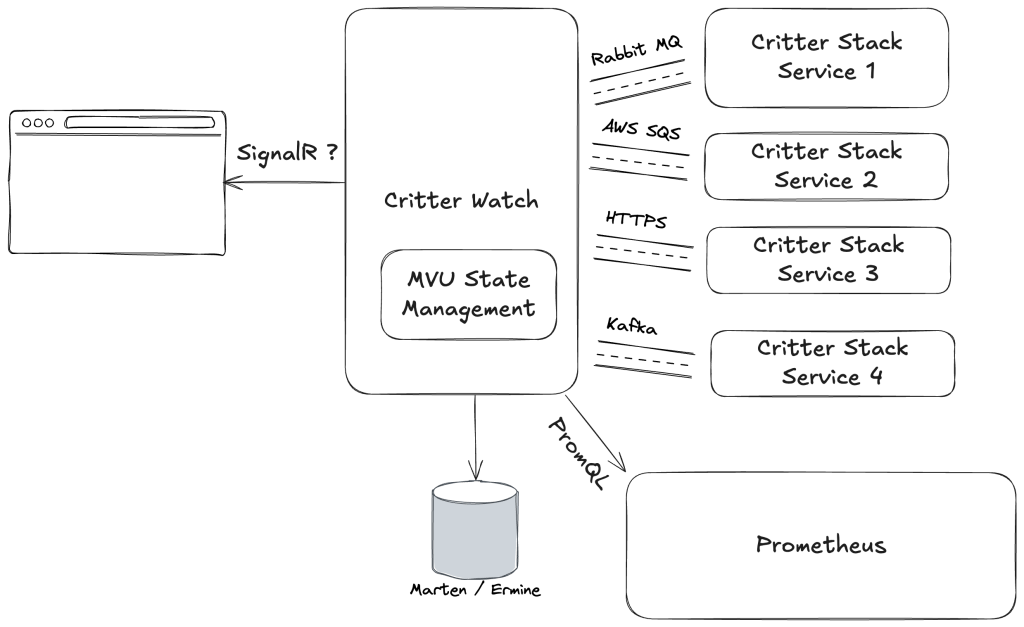

First though, the new SignalR transport in Wolverine is being built now to support our planned “CritterWatch” tool shown below:

As it’s planned out right now, the “CritterWatch” server application will communicate via SignalR to constantly push updated information about system performance to any open browser dashboards. On the other side of things, CritterWatch users will be able to submit quite a number of commands or queries from the browser to CritterWatch, which will then have to relay those commands and queries to the various “Critter Stack” applications being monitored through asynchronous messaging. And of course, we expect the responses or status updates to be constantly flowing from the monitored services to CritterWatch, which will then relay information or updates to the browsers, again by SignalR.

Long story short, there’s going to be a lot of asynchronous messaging back and forth between the three logical applications above, and this is where a new SignalR transport for Wolverine comes into play. Having the SignalR transport gives us a standardized way to send a number of different logical messages from the browser to the server and take advantage of everything that the normal Wolverine execution pipeline gives us, including relatively clean handler code compared to other messaging or “mediator” tools, baked-in observability and traceability, and Wolverine’s error resiliency. Going back the other way, the SignalR transport gives us a standardized way to publish information right back to the client from our server.

Enough of that, let’s jump into some code. From the integration testing code, let’s say we’ve got a small web app configured like this:

    var builder = WebApplication.CreateBuilder();

    builder.WebHost.ConfigureKestrel(opts =>
    {
        opts.ListenLocalhost(Port);
    });

    // Note to self: take care of this in the call
    // to UseSignalR() below
    builder.Services.AddSignalR();

    builder.Host.UseWolverine(opts =>
    {
        opts.ServiceName = "Server";

        // Hooking up the SignalR messaging transport
        // in Wolverine
        opts.UseSignalR();

        // These are just some messages I was using
        // to do end to end testing
        opts.PublishMessage<FromFirst>().ToSignalR();
        opts.PublishMessage<FromSecond>().ToSignalR();
        opts.PublishMessage<Information>().ToSignalR();
    });

    var app = builder.Build();

    // Syntactic sugar, really just doing:
    // app.MapHub<WolverineHub>("/messages");
    app.MapWolverineSignalRHub();

    await app.StartAsync();

    // Remember this, because I'm going to use it in test code
    // later
    theWebApp = app;

With that configuration, when you call IMessageBus.PublishAsync(new Information("here's something you should know")); in your system, Wolverine will route that message through SignalR, where it will be received by a client through the default “ReceiveMessage” operation. The JSON delivered to the client will be wrapped in an envelope that follows the CloudEvents specification.

Likewise, Wolverine will expect messages posted to the server from the browser to be embedded in that lightweight, CloudEvents-compliant wrapper.

Not coincidentally, we are also adding CloudEvents support for extended interoperability in Wolverine 5.0.
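
To give a rough feel for what a CloudEvents wrapper around a message looks like, here is a minimal sketch built only on System.Text.Json. It is not Wolverine's actual envelope or serializer: the CloudEventEnvelope and CloudEventSketch types, the "/server" source, and the shape of the Information record are assumptions made up for illustration. The attribute names (specversion, id, source and type are required; datacontenttype, time and data are optional) come from the CloudEvents 1.0 JSON format.

```csharp
using System;
using System.Text.Json;
using System.Text.Json.Serialization;

// Hypothetical stand-in for the Information message used in the tests
public record Information(string Message);

// Illustrative envelope carrying the CloudEvents 1.0 attributes.
// This is NOT Wolverine's own envelope type.
public record CloudEventEnvelope<T>(
    [property: JsonPropertyName("specversion")] string SpecVersion,
    [property: JsonPropertyName("id")] string Id,
    [property: JsonPropertyName("source")] string Source,
    [property: JsonPropertyName("type")] string Type,
    [property: JsonPropertyName("datacontenttype")] string DataContentType,
    [property: JsonPropertyName("time")] DateTimeOffset Time,
    [property: JsonPropertyName("data")] T Data);

public static class CloudEventSketch
{
    // Wrap a message body in a CloudEvents-style JSON envelope
    public static string Wrap<T>(T message, string source, string type)
    {
        var envelope = new CloudEventEnvelope<T>(
            "1.0",
            Guid.NewGuid().ToString(),
            source,
            type,
            "application/json",
            DateTimeOffset.UtcNow,
            message);

        return JsonSerializer.Serialize(envelope);
    }

    // Pull the message body back out of the envelope
    public static T Unwrap<T>(string json)
        => JsonSerializer.Deserialize<CloudEventEnvelope<T>>(json)!.Data;
}
```

Calling CloudEventSketch.Wrap(new Information("here's something you should know"), "/server", "Information") yields a JSON object with the attributes listed above and the message itself under data, and Unwrap reverses the trip on the receiving side. How Wolverine names the type attribute or fills in source is not covered here.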

For testing, the new WolverineFx.SignalR NuGet will also have a separate messaging transport using the SignalR Client just to facilitate testing, and you can see that usage in some of the testing code:

    // This starts up a new host to act as a client to the SignalR
    // server for testing
    public async Task<IHost> StartClientHost(string serviceName = "Client")
    {
        var host = await Host.CreateDefaultBuilder()
            .UseWolverine(opts =>
            {
                opts.ServiceName = serviceName;

                // Just pointing at the port where Kestrel is
                // hosting our server app that is running
                // SignalR
                opts.UseClientToSignalR(Port);

                opts.PublishMessage<ToFirst>().ToSignalRWithClient(Port);

                opts.PublishMessage<RequiresResponse>().ToSignalRWithClient(Port);

                opts.Publish(x =>
                {
                    x.MessagesImplementing<WebSocketMessage>();
                    x.ToSignalRWithClient(Port);
                });
            }).StartAsync();

        _clientHosts.Add(host);

        return host;
    }

And now to show a little Wolverine-esque spin, let’s say that you have a handler being invoked by a browser sending a message through SignalR to a Wolverine server application, and as part of that handler, you need to send a response message right back to the originating SignalR connection, and therefore to the right browser instance.

Conveniently enough, you have this helper to do exactly that in a pretty declarative way:

    public static ResponseToCallingWebSocket<WebSocketResponse> Handle(RequiresResponse msg)
        => new WebSocketResponse(msg.Name).RespondToCallingWebSocket();

And just for fun, here’s the test that proves the above code works:

    [Fact]
    public async Task send_to_the_originating_connection()
    {
        var green = await StartClientHost("green");
        var red = await StartClientHost("red");
        var blue = await StartClientHost("blue");

        var tracked = await red.TrackActivity()
            .IncludeExternalTransports()
            .AlsoTrack(theWebApp)
            .SendMessageAndWaitAsync(new RequiresResponse("Leo Chenal"));

        var record = tracked.Executed.SingleRecord<WebSocketResponse>();

        // Verify that the response went to the original calling client
        record.ServiceName.ShouldBe("red");
        record.Message.ShouldBeOfType<WebSocketResponse>().Name.ShouldBe("Leo Chenal");
    }

And for one last trick, let’s say you want to work with grouped connections in SignalR so you can send messages to a subset of connected clients. In this case, I went down the Wolverine “Side Effect” route, as you can see in these example handlers:

    // Declaring that you need the connection that originated
    // this message to be added to the named SignalR client group
    public static AddConnectionToGroup Handle(EnrollMe msg)
        => new(msg.GroupName);

    // Declaring that you need the connection that originated this
    // message to be removed from the named SignalR client group
    public static RemoveConnectionToGroup Handle(KickMeOut msg)
        => new(msg.GroupName);

    // The message wrapper here sends the raw message to
    // the named SignalR client group
    public static object Handle(BroadCastToGroup msg)
        => new Information(msg.Message)
            .ToWebSocketGroup(msg.GroupName);
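
For comparison, here is roughly what the same operations look like when written by hand against plain ASP.NET Core SignalR with no Wolverine involved. This is only a sketch: the PlainSignalRHub class and its method names are hypothetical, and it says nothing about how Wolverine implements its side effects internally. It uses the stock Hub members (Clients.Caller, Clients.Group, Groups, Context.ConnectionId) and assumes a project that references the ASP.NET Core framework.

```csharp
using System.Threading.Tasks;
using Microsoft.AspNetCore.SignalR;

// Hypothetical hub, for comparison only. None of this is Wolverine code.
public class PlainSignalRHub : Hub
{
    // Reply only to the connection that invoked this hub method
    public Task RequiresResponse(string name)
        => Clients.Caller.SendAsync("ReceiveMessage", name);

    // Add the calling connection to a named group
    public Task EnrollMe(string groupName)
        => Groups.AddToGroupAsync(Context.ConnectionId, groupName);

    // Remove the calling connection from a named group
    public Task KickMeOut(string groupName)
        => Groups.RemoveFromGroupAsync(Context.ConnectionId, groupName);

    // Send a message to every connection in the named group
    public Task BroadcastToGroup(string groupName, string message)
        => Clients.Group(groupName).SendAsync("ReceiveMessage", message);
}
```

With a hub like this, every new message type means another hub method and another client-side invocation name. The handler style above keeps that plumbing out of application code and expresses the caller reply and group membership changes as return values.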

I should say that all of the code samples are taken from our test coverage. At this point the next step is to pull this into our CritterWatch codebase to prove out the functionality. The first thing up with that is building out the server side of what will be CritterWatch’s “Dead Letter Queue Console” for viewing, querying, and managing the DLQ records for any of the Wolverine applications being monitored by CritterWatch.

For more context, here’s the live stream on Wolverine 5: