To the best of my recollection and some internet sleuthing today, development on Marten started in October 2015. The previous summer, my then-colleague Corey Kaylor had kicked around an idea to use the new JSONB feature in PostgreSQL 9.4 to replace our problematic usage of a third-party NoSQL database in a production application. That database was RavenDb, though some of the trouble was on us (me), and RavenDb was young at the time. Digging around today, I even found the first post I wrote announcing a new tool called Marten later that month.

At this point I feel pretty confident in saying that Marten is the leading Event Sourcing tool for the .NET platform. It’s definitely the most capable toolset for Event Sourcing you can use in .NET, and arguably the only truly “batteries included” option*, especially if you consider its combination with Wolverine into the “Critter Stack.” On top of that, it still fulfills its original role as a robust, easy-to-use document database. It has a much better local development story and transactional model than most NoSQL options, which tend to be either cloud-only or weaker on data consistency than Marten’s PostgreSQL foundation.

If you’ll indulge just a little bit of navel gazing today, I’d like to walk back through some of the notable history of Marten and thank some fellow travelers along the way. As I mentioned before, Corey Kaylor was the project cofounder and “Marten as a Document Database” was really his original idea. Oskar Dudycz was a massive contributor and really co-leader of Marten for many years, especially around Marten’s current focus on Event Sourcing (you can follow his current work with Event Sourcing and PostgreSQL on Node.js with Emmett). Babu Annamalai has been a core team member of Marten for most of its life and has done yeoman work on our DevOps infrastructure and website as well as making large contributions to the code. Jaedyn Tonee has been one of our most active community members and is now a core team member and contributor. Anne Erdtsieck adds some younger blood, enthusiasm, and a lot of helpful documentation. Jeffry Gonzalez is helping me a great deal with community efforts and now the CritterWatch tooling.

Beyond that, Marten has benefited from far, far more community involvement than any other OSS project I’ve ever been a part of. I think we’re sitting at around 250 official contributors to the codebase (a massive number for a .NET OSS project), but even that undercounts the true community once you account for everybody who has made suggestions, given feedback, or taken the time to create actionable GitHub issues that led to improvements in Marten.

More recently, JasperFx Software’s engagements with our customers using Marten have directly led to a very large number of technical improvements like partitioning support, first class subscriptions, multi-tenancy improvements, and quite a bit of the integration with Wolverine for scalability and first class messaging support.

Some Project History

When I started the initial PoC work on what is now Marten in late 2015, I was just getting over my funk from the failure of a previous multi-year OSS effort and was furiously doing conceptual planning for a new application framework codenamed “Jasper” that was going to learn from everything I thought went wrong with FubuMVC (“Jasper” was later rebooted as “Wolverine” to fit the “Critter Stack” naming theme and to act as a natural complement to Marten).

To tell this story one last time: while I was doing the initial work, I was using the codename “Jasper.Data.” Corey called me one day and, in his laconic manner, asked me what codename I was going to use, adding “not something lame like Jasper.Data.” I said um, no, and remembering that Selenium was named as the “cure for mercury poisoning,” I quickly googled for the “natural predators of ravens.” That is how we landed on the name “Marten” for our planned drop-in replacement for RavenDb.

As I said earlier, I was really smarting from the FubuMVC project failure, and a big part of my own lessons learned was that I should have been much more aggressive about project promotion and community building from the very beginning instead of just being a mad scientist. It turned out that there were at least a couple of other efforts out there to build something like Marten, but I still had some leftover name recognition from the CodeBetter and ALT.NET days (don’t bother looking for that, it’s all long gone now), and Marten quickly won out over those other nascent projects and even attracted an important cadre of early, active contributors.

Our 1.0 release was in mid-2016, just in time for Marten to go into production in a heavy-traffic application that fall.

A couple of years earlier I had spent about a month doing some proof of concept work on a possible PostgreSQL-backed event store on NodeJS, so I had some interest in Event Sourcing as a possible feature set. I tossed a small event store off to the side of the Marten 1.0 release, which was mostly about the Document Database feature set. To be honest, I was just irritated at the wasted effort from the abandoned NodeJS work and didn’t want it to be a complete loss. I had zero idea at that time that the Event Sourcing feature set in what I thought was going to be a little side project mostly for work would turn out to be the most important and positively impactful technical effort of my career.

As it turned out, we abandoned our plans at that time to jump from .NET to NodeJS when the left-pad incident happened on literally the exact same day we were going to meet one last time to decide if we really wanted to do that (as it turned out, we did not). At the same time, David Fowler and co. on the AspNetCore team finally started talking about “Project K” which, while cut down, did become what we now know as .NET Core. In my opinion, even though that team drives me bonkers sometimes, it saved .NET as a technical platform and gave .NET a much brighter future.

Marten 2.0 came out in 2017 with performance improvements, our first built in multi-tenancy feature set, and some customization of JSON serialization for the first time.

Marten 3.0 was released in late 2018 alongside the formation of our first “official” core team. The release itself wasn’t that big of a deal, but having an actual core team paid huge dividends for the project over time.

Marten went quiet for a while after I left the company that had originally sponsored Marten development, but the community and I released the then-mammoth Marten 4.0 in late 2021, which I hoped at the time would permanently fix every possible bit of the technical foundation and set us up for endless success. Schema management, LINQ internals, multi-tenancy, low-level mechanics, and a nearly complete overhaul of the Event Sourcing support were all part of that release. By then it was already clear that Marten was an Event Sourcing tool that also had a Document Database feature set instead of vice versa.

Narrator voice: V4 was not the end of development and did not fix every possible bit of the Marten technical foundation.

Marten 5.0 followed just 6 months later to fix some usability issues we’d introduced in 4.0, with our first foray into standardized AddMarten() bootstrapping and .NET IHost integration. Just as importantly, 5.0 introduced Marten’s support for multi-tenancy through separate databases in addition to our previous “conjoined” tenancy model.

Marten 6.0 landed in May 2023, just as I was about to launch JasperFx. Oskar added the very important event upcaster feature. I might be misremembering, but I think this is also about when we added full text search to Marten.

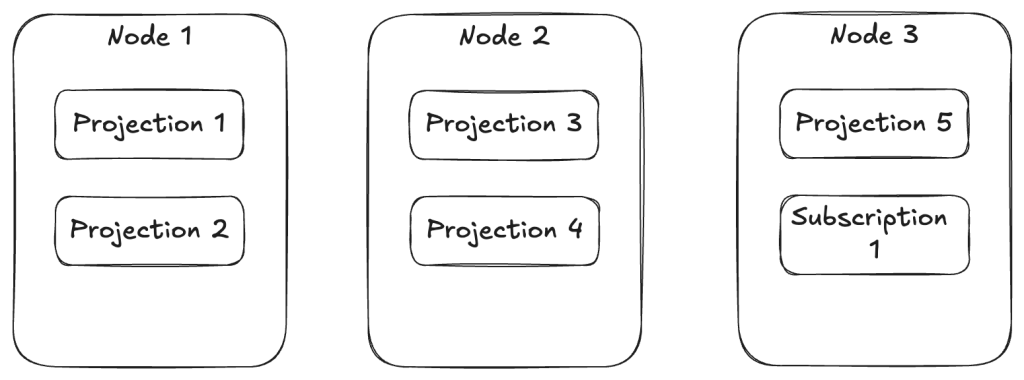

Marten 7.0 was released in March of last year, and represented the single largest feature release I think we’d ever done. In this release we did a near rewrite of the LINQ support and extended its use cases while in some cases dramatically improving query performance. The very lowest-level database execution pipeline was greatly improved by introducing Polly for resiliency and by using every advanced trick in Npgsql we could find to improve query batching and command execution. The important async daemon got some serious improvements to how it distributes work across an application cluster, which is even more effective when combined with Wolverine for load distribution. Babu added a new native PostgreSQL “partial update” feature we’d wanted for years, as the PLV8 engine had fallen out of favor. Heck, 7.0 even added a new model for dynamically adding tenant databases at runtime with no downtime, and a true blue/green deployment model for versioned projections as part of the Event Sourcing feature set. JT added PostgreSQL read replica support that’s completely baked into Marten.

Feel free to correct me if I’m wrong, but I don’t believe there is another event sourcing tool on the planet that can match the Critter Stack’s ability to do blue/green deployments with active event projections without sacrificing strong data consistency.

There was an absurd amount of feature development during 2024 and early 2025 that included:

- PostgreSQL partitioning support for scalability and performance

- Full OpenTelemetry and metrics support throughout Marten

- The “Quick Append” option for faster event store operations

- A “side effect” model within projections that folks had wanted for years

- Convenience mechanisms to make event archiving easier

- New mechanisms to manage tenant data at runtime

- Non-stale querying of asynchronously projected event data

- The FetchLatest() API for optimized fetching or advancement of single stream projections. This was very important for optimizing common CQRS command handler usages

- And a lot more…

Marten 8.0 was released this June, and I’ll admit that it mostly involved restructuring the shared dependencies underneath both Marten and Wolverine. There was also a large effort to pull quite a bit of the event store functionality and key abstractions out into a shared library that will theoretically be used in a future critter tool for SQL Server-backed event sourcing.

And about that…

Why not SQL Server?!?

If Marten is 10 years old, then that means it’s been 10 years of receiving well-intentioned (and sometimes not so well-intentioned) advice that Marten should have been built on SQL Server instead of PostgreSQL, or that we should have sprinkled abstractions every which way so that we or community contributors could casually override a pluggable interface to swap PostgreSQL out for SQL Server or Oracle or whatever.

Here’s the way I see this after all these years:

- The PostgreSQL feature set for JSON is still far ahead of SQL Server’s, and Marten depends on a lot of that special PostgreSQL sauce. Maybe the new SQL Server JSON type will change that equation, but…

- I’ve already invested far more time than I think I should have getting ready to build a planned SQL Server backed port of Marten and I’m not convinced that that effort will end up being worth the sunk cost 😦

- The “just use abstractions” armchair architecting isn’t really viable, and I think it would have exploded the internal complexity of several Marten subsystems. And honestly, I was adamant that we go YAGNI on Marten extensibility upfront so we’d actually get something built after having gone to the opposite extreme with a prior OSS effort.

- PostgreSQL is gaining traction fast in the .NET community and it’s actually much rarer now to get pushback from potential users on PostgreSQL usage — even in the normally very Microsoft-centric .NET world

Marten’s Future

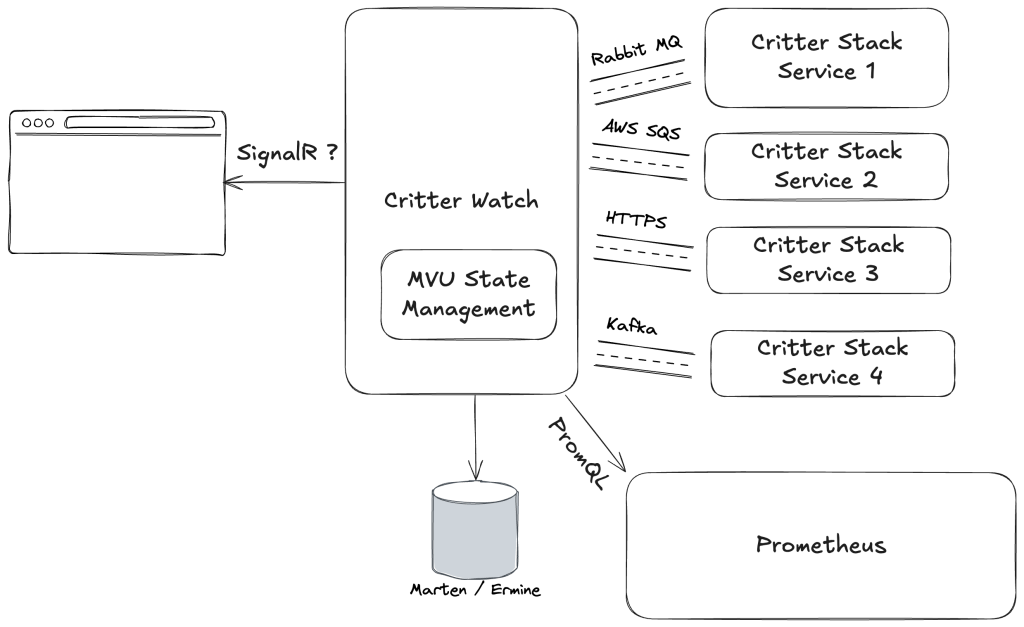

Other than possible performance optimizations, I think Marten itself will slow down quite a bit in terms of feature development in the near future. That could change anytime a JasperFx client needs something, of course, but for the most part I think most of the Critter Stack effort for the remainder of the year goes into the in-flight “CritterWatch” tool, which will be a management and observability console application for Critter Stack systems in production.

Summary

I can’t say that back in 2015 I had any clue that Marten would end up being so important to my career. I will say that when I was interviewing with Calavista in 2018, I did a presentation on early Marten as part of that process that most certainly helped me get that position. At the time, my soon-to-be colleague interviewing me asked me what professional effort I was most proud of, and I answered “Marten” even then.

I had long wanted to branch out and start a company around my OSS efforts, but had largely given up on that dream until someone I barely know from conferences reached out to ask why in the world we hadn’t already commercialized Marten, because he thought it was a better choice than even the leading commercial tool. That little DM exchange, along with endless encouragement and support from my wife of course, gave me a bit of confidence and a jolt to get going. Knowing that Marten needed some integration with messaging and a better story for CQRS within an application, I brought Wolverine back to life, originally as a purposeful complement to Marten, which led to our now “Critter Stack” that is the only real end-to-end technical stack for Event Sourcing in the .NET ecosystem.

Anyway, the whole moral of this little story is that the most profound effort of my now long technical career was largely an accident and only possible with a helluva lot of help, support, and feedback from other people. From my side, I’d say that the one personal strength that does set me apart from most developers and directly contributed to Marten’s success is simply having a much longer attention span than most of my peers :). Make of *that* what you will.

* Yes, you can use the commercial KurrentDb library within a .NET application, but that only provides a small subset of Marten’s capabilities and requires a lot more repetitive code to use than Marten does.