Let’s start by making the statement that automating end to end, full stack tests against a non trivial web application with any degree of asynchronous behavior is just flat out hard. My shop has probably over done it with black box, end to end tests using Selenium in the past and it’s partially given automated testing a bad name to the point where many teams are hesitant to try it. As a reaction to those experiences, we’re trying to convince our teams to rebalance our testing efforts away from writing so many expensive, end to end tests and unit tests that overuse mock objects to writing far more intermediate level integration tests that provide a much better effort to reward ratio.

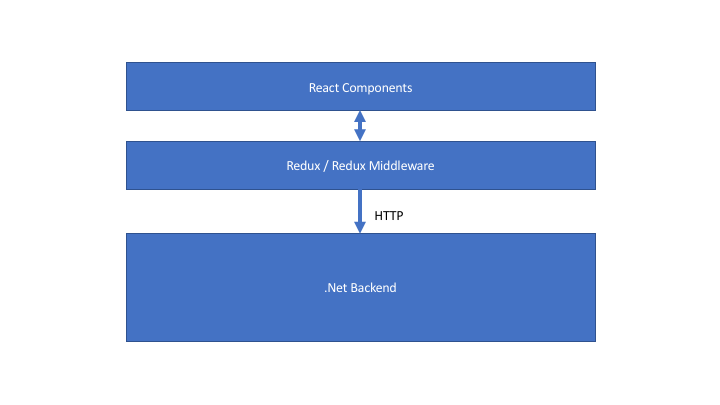

As part of that effort, consider our theoretical, preferred technical stack for new web application development consisting of a React/Redux front end, an ASP.Net Core application running on the web server, and some kind of database. The typical Microsoft layered architecture (minus the obligatory cylinder for the database that I just forgot to add) would look like this:

Now, let’s talk about how we would want to devise our automated testing strategy for this technical stack (our test pyramid, if you will). First though, let me state some of my philosophy around automated testing:

- I think that the primary purpose of automated testing is to try to find and remove problems in your code rather than try to prove that the system works perfectly. That’s actually an important argument because it’s a prerequisite for accepting white box testing — which frequently tends to be a much more efficient approach — as a valid approach compared to only accepting end to end, black box tests.

- The secondary purpose of automated tests is to act as a regression test cycle that makes it “safe” for a team to continuously evolve or extend the codebase. That usage as a regression cycle is highly dependent upon the automated tests being fast, reliable, and not too brittle when the system is changed. The big bang, end to end Selenium based tests tend to fall down on all three of those criteria.

- In most cases, you want to try to pick the testing approach that gives you the fastest feedback cycle while still telling you something useful

Here’s more on what I think makes for a successful test automation strategy.

Now, to make that more concrete in regards to our technical stack shown above, I’d recommend:

- Writing unit tests directly against the React components using something like Enzyme where that’s valuable. My personal approach is to make most of my React components pretty dumb and hopefully just be pure function components where you might not worry about tests, but I think that’s a case by case decision. As an aside, I think that React is easily the most testable user interface tooling I’ve ever used and maybe the first one I’ve ever used that took testability so seriously.

- Write unit tests with Mocha or Jest directly against the Redux reducers. This removes problems in the user interface state logic.

- Since there is coupling between your Redux store and the React components, I would strongly suggest some level of integration testing between the Redux tore and the React components, especially if you depend on transformations within the react-redux wiring. I thought I got quite a bit of value out of doing that in my Storyteller user interface, and it should be even better swapping out in-browser testing with Karma in favor of using Enzyme for the React components.

- Continue to write unit tests with xUnit tests against elements of the .Net code wherever that makes sense, with the caveat being that if you find yourself writing tests with mock objects that seem to be just duplicating the implementation of the real code, it’s time to switch to an integration test. Here’s my thoughts and guidance for staying out of trouble with mock objects. Some day, I’d like to go through and rewrite my old CodeBetter-era posts on testability design, but that’s not happening any time soon.

- Intermediate level integration testing against HTTP endpoints using Alba (or something similar), or testing message handling, or even integration testing of a service within the application using its dependencies. I’m assuming the usage of any kind of backing database within these tests. If these tests involve a lot of data setup, I’d personally recommend switching from xUnit to Storyteller where it’s easier to deal with test data state and the test lifecycle. The key here is to remove problems that occur between the .Net code and the backing database in a much faster way than you could ever possibly do with end to end, Selenium-based tests.

- Write a modicum of black box, end to end tests with Selenium just to try to find and prevent integration errors between the entire stack. The key here isn’t to eliminate these kinds of tests altogether, but rather to rebalance our efforts toward more efficient mechanisms wherever we can.

The big thing that’s missing in that bullet list above is some kind of testing that can weed out problems that arise in the interaction and integration of the React/Redux front end and the .Net middle tier + the backing database. Tomorrow I’ll drop a follow up blog post with an experimental approach to using Storyteller to author data centric, subcutaneous tests from the Redux store layer down through the backing database as an alternative to writing so many functional tests with Selenium driving the front end.

A Tangential Rant about Selenium

First off, I have nothing against Selenium itself and other than the early diamond dependency hell before it ilmerged Newtonsoft.Json (it’s always Newtonsoft’s fault) and various browser updates breaking it. I’ve had very few issues with the Selenium library by itself. That being said, every so often I’ll have an interaction with a tester at work who thinks that automated testing begins and ends with writing Selenium scripts against a running application that drives me up the wall. Like clockwork, I’ll spend some energy trying to talk about issues like data set up, using a testing DSL tool like Cucumber or my own Storyteller to make the specs more readable, and worrying about how to keep the tests from being too brittle and they’ll generally ignore all of that because the Selenium tutorials make it seem so easy.

The typical Selenium tutorial tends to be simplistic and gives newbies a false sense about how difficult automated testing is and what it involves. Working through a tutorial on Selenium that requires you to control some kind of contact form, then post it to the server and check the values on the next page without any kind of asynchronous behavior and then saying that you’re ready to do test automation against real systems is like saying you know how to play chess because you know how the horse-y guy moves on the board.

One thought on “Automated Test Pyramid in our Typical Development Stack”