That’s Winterfell from a Game of Thrones if you were curious. I have no earthly idea whether or not this mini-series of posts will be the slightest bit useful for anyone else, but it’s probably been good for me to read up much more on what other people think and to just flat out ponder this a lot more as it’s suddenly relevant to several different current JasperFx Software clients.

In my last post, Thoughts on “Modular Monoliths”, I started writing a massive blog post about my reservations about modular monoliths and where and how the “Critter Stack” tools (Marten and Wolverine) are or aren’t already well suited for modular monolith project construction, but I really only got around to talking about the problems with both traditional monolith structures and micro-service architectures before running out of steam. This post is to finally lay out my thoughts on “modular monoliths”, with a third post coming later to get into some specifics about how the Critter Stack tools fit into this architectural style. I do think even the specifics of the Critter Stack tooling will help illuminate some potential challenges for folks building modular monolith systems with completely different tooling.

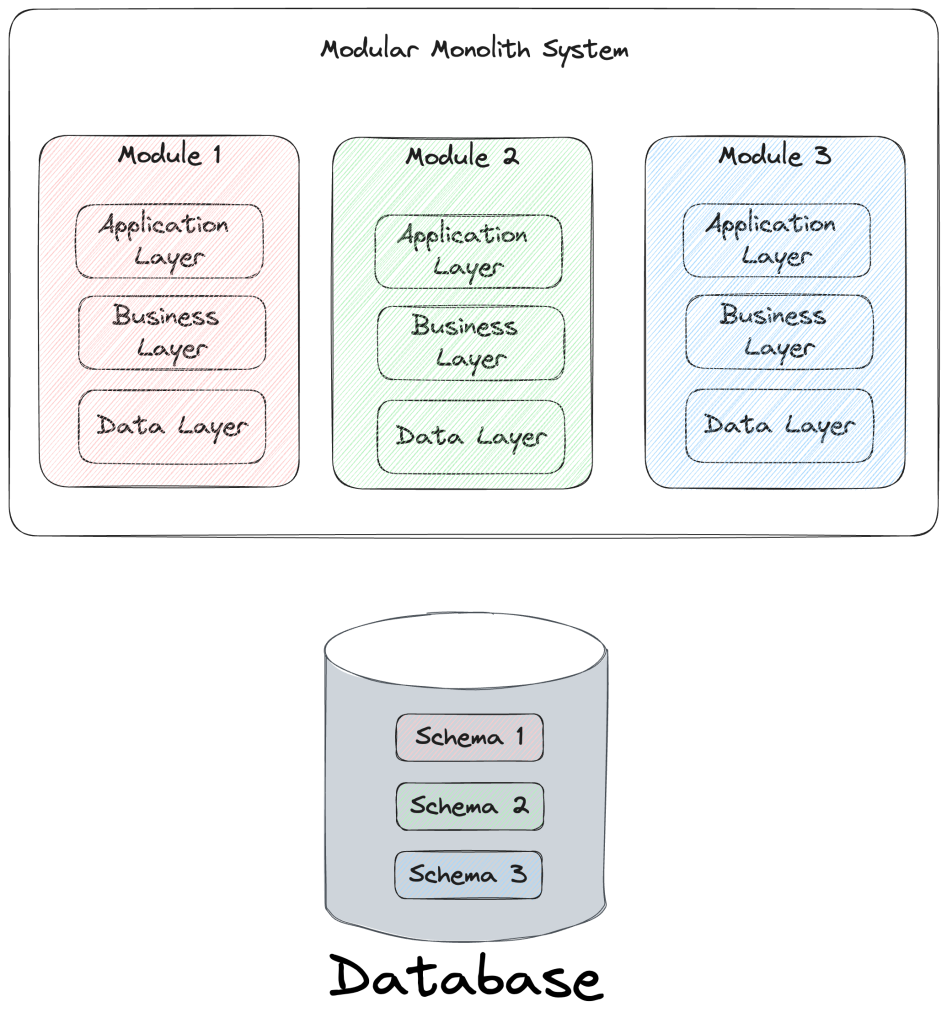

So let’s get started by me saying that my conceptual understanding of a “modular monolith” is that it’s a single codebase like we’ve traditionally done and consistently failed at, but this time we’ll pay more attention to isolating logical modules of functionality within that single codebase:

As I said in the earlier post, I’m very dubious about how effective the modular monolith strategy will really be for our industry because I think it’s still going to run into some very real friction. Do keep in mind that I’m coming from a primarily .NET background, and that means there’s no easy way to run multiple versions of the same library in the same process. That being said, here’s what else I’m still worried about:

- You may still suffer from the same kind of friction with slow builds and sluggish IDEs you almost inevitably get from large codebases if you have to work with the whole codebase at one time — but maybe that’s not actually a necessity all the time, so let’s keep talking!

- I really think it’s important for the long term health of a big system to be able to do technical upgrades or switch out technologies incrementally instead of having to upgrade the whole codebase at one time. You can never, ever (or at least very rarely) convince a non-technical product owner to let you stop and take six months to upgrade technologies without adding any new features, nor honestly should you even try to do that without some very good reasons

- It’s still just as hard to find the right boundaries between modules as it was to make the proper boundaries for truly independent micro-services

A massive pet peeve of mine is hearing people exclaim something to the effect of “just use Domain Driven Design and your service and/or module boundaries will always be right!” I think the word “just” is doing a lot of work there and anybody who says that should read the classic essay Lullaby Language.

After writing my previous blog post, working on the proposals for a client that spawned this conversation in the first place, and reading up on a lot of other people’s thoughts about this subject, I’ve got a few more positive things to say.

I’m a proponent of “Vertical Slice Architecture” code organization and a harsh critic of layered architecture approaches (Clean/Onion/Hexagonal) as they are commonly practiced, so the idea of organizing related functionality together in modules throughout the system instead of giant, horizontal layers first definitely appeals to me and I think that’s a hugely valuable shift in thinking.

I’m much more bullish on modular monoliths after thinking more about the Citadel and Outpost approach where you start with the assumption that some modules of the monolith will be spun out into separate processes later when the team feels like the service boundaries are truly settled enough to make that viable. To that end, I liked the way that Glenn Henriksen put this over the weekend:

Continuing the “Citadel” theme where you assume that you will later spawn separate “Outpost” processes, I’m now highly concerned with building the initial system in such a way that it’s relatively easy to excise modules into separate processes later. In the early days of Extreme Programming, we talked a little bit about the concept of “Reversibility”, which just means how easy or hard it will be to change your mind and technical direction about any given technology or approach. Likewise with the “modular monolith” approach, I actually want to think a little bit upfront about having a path to easily break out modules into separate processes or services later.

I’m probably a little more confident about introducing some level of asynchronous messaging and distributed development than some folks, so I’m going to come right out and say that I would be willing to start with some modules split into separate processes right off the bat, but ameliorate that by assuming that all these processes will have to be deployed together and will live together in one single mono-repository. To circle back to the earlier “Reversibility” theme, I think this compromise will make it much easier for teams to adjust service boundaries later as everything will be living in the same repository.

Lastly on this topic, it’s .NET-centric, but I’m hopeful that Project Aspire makes it much easier to work with this kind of distributed monolith. Likewise, I’m keeping an eye on tooling like Incrementalist as a way of better working with mono-repository codebases.

What about the Database?

There are potential dangers that might make our modular monolith decision less reversible than we’d like, but for right now let’s focus on just the database (or databases). Specifically, I’m concerned about avoiding what I’ve called the Pond Scum Anti-Pattern where a lot of different applications (or modules in our new world) float on top of a murky shared database like pond scum on brackish water in sweltering summer heat.

I grew up on farms fishing in that exact kind of farm pond, hence the metaphor:)

Taking the ideal from micro-services, I’d love it if each module had logically separate database storage such that even if they are all targeting the same physical database server, there’s some relatively easy way to pull out the storage for an individual module and move it elsewhere later.
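To make “logically separate storage” a little more concrete, here’s a minimal sketch, not a recommendation of any particular database. It uses SQLite’s `ATTACH DATABASE` purely as a stand-in for giving each module its own schema on a shared server. The module names (`ordering`, `invoicing`), the table layouts, and the `open_connection` helper are all hypothetical, made up for illustration.

```python
import sqlite3
from typing import Dict


def open_connection(module_storage: Dict[str, str]) -> sqlite3.Connection:
    """Open one connection with a separately attached database per module.

    `module_storage` maps a (hypothetical) module name to the location of
    that module's storage. Moving a module out later means changing only
    its entry in this map.
    """
    conn = sqlite3.connect(":memory:")
    for schema, location in module_storage.items():
        # Schema names cannot be bound as SQL parameters, so validate them.
        if not schema.isidentifier():
            raise ValueError(f"invalid schema name: {schema!r}")
        conn.execute(f"ATTACH DATABASE ? AS {schema}", (location,))
    return conn


# Both modules share one connection here, but each owns its own schema.
conn = open_connection({"ordering": ":memory:", "invoicing": ":memory:"})

# Each module creates and touches tables only inside its own schema.
conn.execute(
    "CREATE TABLE ordering.orders (id INTEGER PRIMARY KEY, customer_id TEXT)"
)
conn.execute(
    "CREATE TABLE invoicing.invoices "
    "(id INTEGER PRIMARY KEY, customer_id TEXT, amount REAL)"
)
conn.execute(
    "INSERT INTO invoicing.invoices (customer_id, amount) VALUES (?, ?)",
    ("c-1", 120.0),
)
```

The point of the sketch is only that every table name is qualified by its owning module, so nothing in `ordering` has to change if the `invoicing` storage is later pointed somewhere else.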

If scalability was an issue, I would happily go for breaking the storage for some modules out into separate databases, even though that’s a little more complexity. Some of the other folks I read in researching this topic suggested using foreign data wrappers to offload database work while still making it look to your modular monolith like it’s one big happy family database — but I personally think that’s crazy town. There’s also a very real benefit to allowing different modules to use different styles of databases or persistence based on their needs.

This probably won’t happen, but I did at least raise the possibility to a client of using event sourcing in some of their workflow-centric modules while allowing simpler modules to remain CRUD-centric.

How Do the Modules Stay Decoupled?

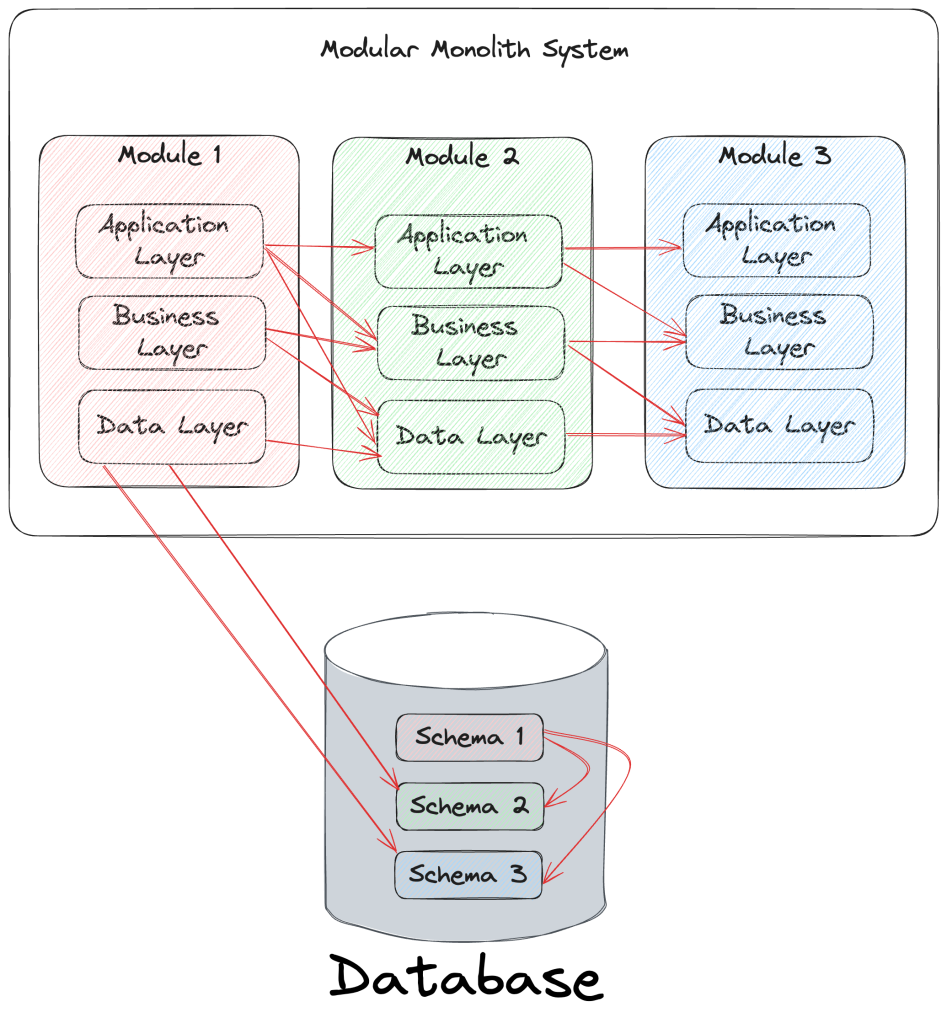

Assuming we all buy off into the idea of our modules remaining loosely coupled over time such that we have a pathway to pull them out into separate “Outpost” processes later, we absolutely don’t want many of the red arrows popping up as shown below:

Hat tip to Steve Smith for this way of describing the modularity issues.

Mechanically, my first inclination to enforce the modularity is to say that we’ll use some kind of mediator tooling like either MediatR or my own Wolverine to handle cross module interactions. That comes with its own set of complications:

- Potentially more code as is almost inevitable when purposely putting in any kind of anti-corruption layer

- What to do when one module needs the data from a second module to do its work? One answer is to use Domain Queries between modules, again probably with some kind of mediator tool. I’ve always been dubious about that strategy because of the extra code ceremony and the simple fact that any technique that adds abstractions between your top level code and the raw data access code has a high tendency to cause performance problems later. If you go down this path, I’d be cognizant of the potential performance penalties and maybe look for some way to batch up queries later

- You potentially just say that if “Module 1 consistently needs access to data managed by Module 2” then you should probably merge the two modules. One fast way to get into trouble in any kind of complicated system is to organize first by different logical persisted entities rather than by operations. I think you’re far more likely to arrive at cleanly separate module boundaries by focusing on the command and query use cases of the system rather than dividing code up by entities like “Invoice” or “Order” or “Shipment.”
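To show roughly what a mediator-based cross module query looks like, here’s a minimal sketch. It is a hand-rolled illustration and not the MediatR or Wolverine API. The `Mediator` class, the `GetOutstandingBalance` message, the two module names, and the sample data are all assumptions I’ve invented for the example.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, Type


class Mediator:
    """Routes a message to the single handler registered for its type."""

    def __init__(self) -> None:
        self._handlers: Dict[Type[Any], Callable[[Any], Any]] = {}

    def register(self, message_type: Type[Any], handler: Callable[[Any], Any]) -> None:
        if message_type in self._handlers:
            raise ValueError(f"handler already registered for {message_type.__name__}")
        self._handlers[message_type] = handler

    def send(self, message: Any) -> Any:
        handler = self._handlers.get(type(message))
        if handler is None:
            raise LookupError(f"no handler for {type(message).__name__}")
        return handler(message)


# --- Public contract of a hypothetical "invoicing" module ---
@dataclass(frozen=True)
class GetOutstandingBalance:
    customer_id: str


# --- Invoicing internals: other modules never touch this directly ---
_INVOICES = {"c-1": [120.0, 30.0], "c-2": []}


def handle_get_outstanding_balance(query: GetOutstandingBalance) -> float:
    return sum(_INVOICES.get(query.customer_id, []))


# --- Hypothetical "ordering" module: knows only the mediator and the contract ---
def can_place_order(mediator: Mediator, customer_id: str, credit_limit: float) -> bool:
    balance = mediator.send(GetOutstandingBalance(customer_id))
    return balance <= credit_limit


# Composition root: the one place that knows about both modules.
mediator = Mediator()
mediator.register(GetOutstandingBalance, handle_get_outstanding_balance)
```

Notice the extra ceremony even at this size: a message type, a handler, and a registration just to read one number. That’s the trade-off from the bullets above, and every `send` call is also a hop that hides what the data access underneath is really doing.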

Just for now, I think there’s one last conversation to have about how a team will go about enforcing the usage of proper patterns and encapsulation of the various modules without devolving into a morass of the red arrows from the picture above. You could:

- Favor internalized discipline and socialized design goals by doing whatever it takes to be able to trust the developers to naturally do the right thing. Kumbaya, up with people, stop laughing at me! I think that internalized discipline will deliver better results than high ceremony approaches that try to straitjacket developers into doing the right things, but I’m prepared to be wrong on this one

- Utilize architectural tests or maybe some kind of fancy static code analysis that can spot violations of the architectural “who can talk to who” rules

- Try to separate out projects or packages for modules or parts of modules to enforce rules about “who can talk to who.” I hate this approach as part of something like the Onion Architecture, and I’m probably naturally suspicious of it inside of modular monoliths too — but at least this time you’re hopefully dividing along the lines of closely related functionality rather than organizing by broad layers first.
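As a rough idea of what the architectural test option could look like, here’s a minimal sketch that scans source text for imports and flags any that break a “who can talk to who” table. The module names, the `ALLOWED` rule set, and the `module/file.py` path convention are all hypothetical, and a real codebase would more likely lean on an existing architecture testing library for its platform than on something hand-rolled like this.

```python
import ast
from typing import Dict, List, Set, Tuple

# Hypothetical rule set: each module and the other modules it may import.
ALLOWED: Dict[str, Set[str]] = {
    "ordering": {"contracts"},
    "invoicing": {"contracts"},
    "contracts": set(),
}


def imported_top_level_names(source: str) -> Set[str]:
    """Return the top-level package names imported by a piece of source."""
    names: Set[str] = set()
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Import):
            names.update(alias.name.split(".")[0] for alias in node.names)
        elif isinstance(node, ast.ImportFrom) and node.module and node.level == 0:
            names.add(node.module.split(".")[0])
    return names


def find_violations(sources: Dict[str, str]) -> List[Tuple[str, str]]:
    """Check 'module/file.py' -> source text pairs against ALLOWED.

    Returns (path, forbidden_module) pairs. Imports of anything that is
    not one of our own modules (stdlib, third party) are ignored.
    """
    violations: List[Tuple[str, str]] = []
    for path, source in sorted(sources.items()):
        owner = path.split("/")[0]
        for target in sorted(imported_top_level_names(source)):
            if target not in ALLOWED or target == owner:
                continue
            if target not in ALLOWED.get(owner, set()):
                violations.append((path, target))
    return violations


sources = {
    "ordering/place_order.py": (
        "from contracts.invoicing import GetOutstandingBalance\n"
        "import invoicing.repository\n"  # a red arrow: reaching into internals
    ),
    "invoicing/handlers.py": (
        "from contracts.invoicing import GetOutstandingBalance\n"
        "import json\n"
    ),
}
violations = find_violations(sources)
```

Run as part of the normal test suite, a check like this fails the build as soon as someone draws a new red arrow, without needing to split every module into its own project.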

Summary and What’s Next

My only summary is that I’m still dubious that the modular monolith idea is going to be a panacea, but this has been helpful to me personally just to think on it much harder and see what other folks are doing and saying about this architectural style.

My next and hopefully last post in this series will be taking a look at how Wolverine and Marten do or do not lend themselves to the modular monolith approach, and what might need to be improved later in these tools.

I think this approach is a good one.

I have spent the last 4 years implementing a medium-large application in that way and I have had very few issues with it.

I have about 25 years of experience, and have used a lot of different approaches when implementing software, but I think this modular monolith thing is the one I like the most, for now.

My approach to domains talking to each other is to create a “super domain” that talks to both of the two domains. That way your arrows only point in one direction.

I have also successfully used “DBUp” to handle the db_schema for several domains in one database.

I have to admit that I have also cheated somewhat when it comes to reading data, and created “cross domain” views in the database.