JasperFx Software is open for business and offering consulting services (like helping you craft modular monolith strategies!) and support contracts for both Marten and Wolverine so you know you can feel secure taking a big technical bet on these tools and reap all the advantages they give for productive and maintainable server side .NET development.

I’ve been thinking, discussing, and writing a bit lately about the whole “modular monolith” idea, starting with Thoughts on “Modular Monoliths” and continuing onto Actually Talking about Modular Monoliths. This time out I think I’d like to just put out some demos and thoughts about where Marten and Wolverine fit well into the modular monolith idea — and also some areas where I think there’s room for improvement.

First off, let’s talk about…

Modular Configuration

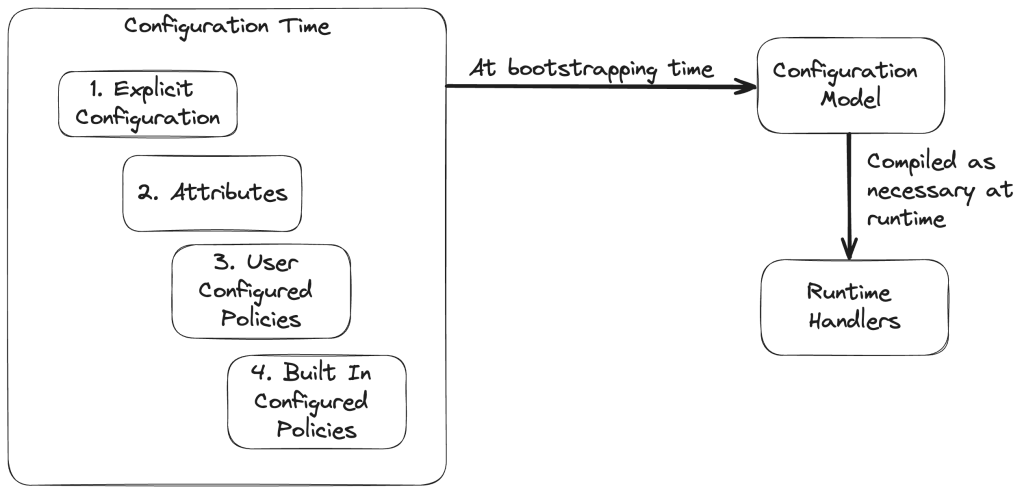

Both tools use the idea of a “configuration model” (what Marten Fowler coined a Semantic Model years ago) that is compiled and built from a combination of attributes in the code, explicit configuration, user supplied policies, and built in policies in baseline Marten or Wolverine as shown below with an indication of the order of precedence:

In code, you as a user configure Marten and Wolverine inside of the Program file for your system like so:

    builder.Services.AddMarten(opts =>
        {
            var connectionString = builder.Configuration.GetConnectionString("marten");
            opts.Connection(connectionString);

            // This will create a btree index within the JSONB data
            opts.Schema.For<Customer>().Index(x => x.Region);
        })
        // Adds Wolverine transactional middleware for Marten
        // and the Wolverine transactional outbox support as well
        .IntegrateWithWolverine();

    builder.Host.UseWolverine(opts =>
    {
        opts.CodeGeneration.TypeLoadMode = TypeLoadMode.Static;

        // Let's build in some durability for transient errors
        opts.OnException<NpgsqlException>().Or<MartenCommandException>()
            .RetryWithCooldown(50.Milliseconds(), 100.Milliseconds(), 250.Milliseconds());

        // Shut down the listener for whatever queue experienced this exception
        // for 5 minutes, and put the message back on the queue
        opts.OnException<MakeBelieveSubsystemIsDownException>()
            .PauseThenRequeue(5.Minutes());

        // Log the bad message sure, but otherwise throw away this message because
        // it can never be processed
        opts.OnException<InvalidInputThatCouldNeverBeProcessedException>()
            .Discard();

        // Apply the validation middleware *and* discover and register
        // Fluent Validation validators
        opts.UseFluentValidation();

        // Automatic transactional middleware
        opts.Policies.AutoApplyTransactions();

        // Opt into the transactional inbox for local
        // queues
        opts.Policies.UseDurableLocalQueues();

        // Opt into the transactional inbox/outbox on all messaging
        // endpoints
        opts.Policies.UseDurableOutboxOnAllSendingEndpoints();

        // Connecting to a local Rabbit MQ broker
        // at the default port
        opts.UseRabbitMq();

        // Adding a single Rabbit MQ messaging rule
        opts.PublishMessage<RingAllTheAlarms>()
            .ToRabbitExchange("notifications");

        opts.LocalQueueFor<TryAssignPriority>()
            // By default, local queues allow for parallel processing with a maximum
            // parallel count equal to the number of processors on the executing
            // machine, but you can override the queue to be sequential and single file
            .Sequential()

            // Or add more to the maximum parallel count!
            .MaximumParallelMessages(10)

            // Pause processing on this local queue for 1 minute if there's
            // more than 20% failures for a period of 2 minutes
            .CircuitBreaker(cb =>
            {
                cb.PauseTime = 1.Minutes();
                cb.SamplingPeriod = 2.Minutes();
                cb.FailurePercentageThreshold = 20;

                // Definitely worry about this type of exception
                cb.Include<TimeoutException>();

                // Don't worry about this type of exception
                cb.Exclude<InvalidInputThatCouldNeverBeProcessedException>();
            });

        // Or if so desired, you can route specific messages to
        // specific local queues when ordering is important
        opts.Policies.DisableConventionalLocalRouting();
        opts.Publish(x =>
        {
            x.Message<TryAssignPriority>();
            x.Message<CategoriseIncident>();
            x.ToLocalQueue("commands").Sequential();
        });
    });

The nested lambdas in AddMarten() and UseWolverine() are configuring the StoreOptions and WolverineOptions models respectively (the “configuration model” in that diagram above).

I’m not aware of any one commonly used .NET idiom for building modular configuration, but I do commonly see folks using extension methods for IServiceCollection or IHostBuilder to segregate configuration that’s specific to a single module, and that’s what I think I’d propose. Assuming that we have a module in our modular monolith system for handling workflow around “incidents”, there might be an extension method something like this:

    public static class IncidentsConfigurationExtensions
    {
        public static WebApplicationBuilder AddIncidentModule(this WebApplicationBuilder builder)
        {
            // Whatever other configuration, services, et al
            // we need for just the Incidents module

            // Extra Marten configuration
            builder.Services.ConfigureMarten(opts =>
            {
                // I'm just adding an index for a document type within
                // this module
                opts.Schema.For<IncidentDetails>()
                    .Index(x => x.Priority);

                // Purposely segregating all document types in this module's assembly
                // to a separate database schema
                opts.Policies.ForAllDocuments(m =>
                {
                    if (m.DocumentType.Assembly == typeof(IncidentsConfigurationExtensions).Assembly)
                    {
                        m.DatabaseSchemaName = "incidents";
                    }
                });
            });

            return builder;
        }
    }

Which would be called from the overall system’s Program file like so:

    var builder = WebApplication.CreateBuilder(args);

    builder.AddIncidentModule();

    // Much more below...

Between them, the two main Critter Stack tools have a lot of support for modularity through:

- Wolverine’s extensions and modules allow you to easily import message handlers or HTTP endpoints from assemblies other than the main application assembly

- Wolverine just got some new support for “asynchronous extensions” to allow for Wolverine modularity to be used with services like the Feature Management library in .NET

- Not coincidentally, Marten also recently got some new support for the same idea of asynchronous, modular configuration extensions specifically to support integration with services like the Feature Management library

- Both Marten and Wolverine allow you to register special extensions in your IoC container that allow you to add additional configuration to both Marten (IConfigureMarten) and Wolverine (IWolverineExtension)

All of the facilities I described above can be used to separate specific configuration for different modules within the module code itself.
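As a rough sketch of that last bullet, a module could carry its own Marten and Wolverine configuration in small extension classes and register them in the IoC container. The class names and the `AddIncidentModuleExtensions` method are made up for illustration, `IncidentDetails` is a stand-in with an assumed shape, and the exact registration helpers may differ between versions, so treat this as a starting point rather than the canonical usage:

```csharp
using System;
using Marten;
using Microsoft.Extensions.DependencyInjection;
using Wolverine;

// Stand-in for the module's document type (hypothetical shape)
public class IncidentDetails
{
    public Guid Id { get; set; }
    public int Priority { get; set; }
}

// Extra Marten configuration that lives inside the Incidents module
public class IncidentsMartenConfiguration : IConfigureMarten
{
    public void Configure(IServiceProvider services, StoreOptions options)
    {
        options.Schema.For<IncidentDetails>().Index(x => x.Priority);
    }
}

// Extra Wolverine configuration that lives inside the Incidents module
public class IncidentsWolverineExtension : IWolverineExtension
{
    public void Configure(WolverineOptions options)
    {
        // Ask Wolverine to look for message handlers in this module's assembly
        options.Discovery.IncludeAssembly(typeof(IncidentsWolverineExtension).Assembly);
    }
}

public static class IncidentsModuleRegistration
{
    // Hypothetical helper: registers both extensions in the container
    public static IServiceCollection AddIncidentModuleExtensions(this IServiceCollection services)
    {
        services.AddSingleton<IConfigureMarten, IncidentsMartenConfiguration>();
        services.AddSingleton<IWolverineExtension, IncidentsWolverineExtension>();
        return services;
    }
}
```

The appeal of this shape is that the main application only has to call one registration method per module, and everything else stays inside the module's own assembly.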

Modular Monoliths and Backing Persistence

In every single experience report you’ll ever find about a team trying to break up and modernize a large monolithic application the authors will invariably say that breaking apart the database was the single most challenging task. If we are really doing things better this time with the modular monolith approach, we’d probably better take steps ahead of time to make it easier to extract services by attempting to keep the persistence for each module at least somewhat decoupled from the persistence of other modules.

Going even farther in terms of separation, it’s not unlikely that some modules have quite different persistence needs and might be better served by using a completely different style of persistence than the other modules. Just as an example, one of our current JasperFx Software clients has a large monolithic application where some workflow-centric modules would be a good fit for an event sourcing approach, while other modules are more CRUD centric or reporting-centric where a straight up RDBMS approach is probably much more appropriate.

So let’s finally bring Marten and Wolverine into the mix and talk about the Good, the Bad, and the (sigh) Ugly of how the Critter Stack fits into modular monoliths:

wah, wah, wah…

Let’s start with a positive. Marten sits on top of the very robust PostgreSQL database. So in addition to Marten’s ability to use PostgreSQL as a document database and as an event store, PostgreSQL out of the box is a rock solid relational database. Heck, PostgreSQL even has some ability to be used as a graph database! The point is that using the Marten + PostgreSQL combination gives you a lot of flexibility in terms of persistence style between different modules in a modular monolith without introducing a lot more infrastructure. Moreover, Wolverine can happily utilize its PostgreSQL-backed transactional outbox with both Entity Framework Core and Marten targeting the same PostgreSQL database in the same application.

Continuing with another positive, let’s say that we want to create some logical separation between our modules in the database, and one way to do so would be to simply keep Marten documents in separate database schemas for each module. Repeating a code sample from above, you can see that configuration below:

    public static WebApplicationBuilder AddIncidentModule(this WebApplicationBuilder builder)
    {
        // Whatever other configuration, services, et al
        // we need for just the Incidents module

        // Extra Marten configuration
        builder.Services.ConfigureMarten(opts =>
        {
            // Purposely segregating all document types in this module's assembly
            // to a separate database schema
            opts.Policies.ForAllDocuments(m =>
            {
                if (m.DocumentType.Assembly == typeof(IncidentsConfigurationExtensions).Assembly)
                {
                    m.DatabaseSchemaName = "incidents";
                }
            });
        });

        return builder;
    }

So, great, the storage for Marten documents could easily be segregated by schema. Especially considering there are few or no referential integrity relationships between Marten document tables, it should be relatively easy to move these document tables to completely different databases later!

And with that, let’s move more into “Bad” or hopefully not too “Ugly” territory.

The event store data in Marten is all in one single set of tables (mt_streams and mt_events). So every module utilizing Marten’s event sourcing could be intermingling their events in just these tables through the one single AddMarten() store for the application’s IHost. You could depend on marking event streams by their aggregate type like so:

    public static async Task start_stream(IDocumentSession session)
    {
        // the Incident type argument is strictly a marker for
        // Marten
        session.Events.StartStream<Incident>(new IncidentLogged());
        await session.SaveChangesAsync();
    }

I think we could ameliorate this situation with a couple future changes:

- A new flag in Marten that would make it mandatory to mark every new event stream with an aggregate type specifically to make it easier to separate the events later to extract a service and its event storage

- Some kind of helper to move event streams from one database to another. It’s just not something we have in our tool belt at the moment

Of course, it would also help immeasurably if we had a way to split the event store storage for different types of event streams, but somehow that idea has never gotten any traction within Marten and never rises to the level of a high priority. Most of our discussions about sharding or partitioning the event store data have been geared around scalability, which is certainly an issue here too of course.

Marten also has its concept of “separate stores” that was meant to allow an application to interact with multiple Marten-ized databases from a single .NET process. This could be used with modular monoliths to segregate the event store data, even if targeting the same physical database in the end. The very large downside to this approach is that Wolverine’s Marten integration does not today do anything with the separate store model. So no Wolverine transactional middleware, event forwarding, transactional inbox/outbox integration, and no aggregate handler workflow. So basically everything about the full “Critter Stack” integration that makes that tooling the single most productive event sourcing development experience in all of .NET (in my obviously biased opinion). Ugly.
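For reference, a minimal sketch of what a separate store for one module might look like. The `IIncidentsStore` marker interface and the `AddIncidentsStore` helper are hypothetical names, and the connection string is assumed to come from the host application's configuration:

```csharp
using Marten;
using Microsoft.Extensions.DependencyInjection;

// Marker interface identifying the Incidents module's own store (hypothetical name)
public interface IIncidentsStore : IDocumentStore
{
}

public static class IncidentsStoreRegistration
{
    // Hypothetical helper that registers an additional Marten store for just this module
    public static IServiceCollection AddIncidentsStore(
        this IServiceCollection services, string connectionString)
    {
        services.AddMartenStore<IIncidentsStore>(opts =>
        {
            // Could be the same physical database as the main store
            opts.Connection(connectionString);

            // Default schema for this store's tables, keeping them apart
            // from the tables owned by the main store
            opts.DatabaseSchemaName = "incidents";
        });

        return services;
    }
}
```

Code inside the module would then take a dependency on `IIncidentsStore` and open its own sessions from it, with the caveat from above that none of the Wolverine integration applies to sessions created this way.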

Randomly, I heard an NPR interview with Eli Wallach, the actor who played the “Ugly” character in the famous western, recorded very late in his life, and I could only describe him as effusively jolly. So basically a 180 degree difference from his character!

Module to Module Communication

I’ve now spent much more time on this post than I had allotted, so it’s time to go fast…

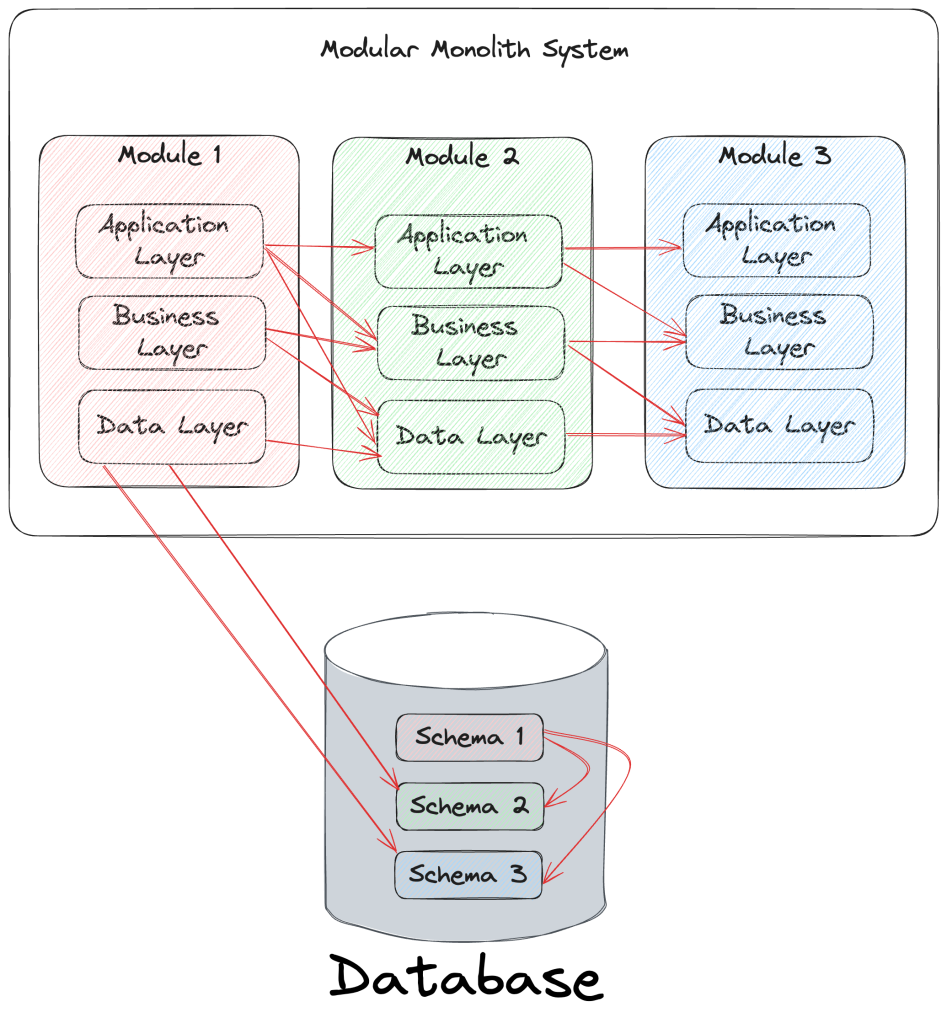

In my last post I used this diagram to illustrate the risk of coupling modules through direct usage of internals (the red arrows):

Instead of the red arrows everywhere above, I think I’m in favor of trying to limit the module to module communication to using some mix of a “mediator” tool or an in memory message bus between modules. That’s obviously going to come with some overhead, but I think (hope) that overhead is a net positive.

For a current client, I’m recommending they further utilize MediatR as they move a little more in the direction of modularity in their current monolith. For greenfield codebases, I’d recommend Wolverine instead because I think it does much, much more.

First, Wolverine has a full set of functionality to be “just a Mediator” to decouple modules from too much of the internals of another module. Secondly, Wolverine has a lot of support for background processing through local, in memory queues that could be very advantageous in modular monoliths where Wolverine can de facto be an in memory message bus. Moreover, Wolverine’s main entry point usage is identical for messages processed locally versus messages published through external messaging brokers to external processes:

    public static async Task using_message_bus(IMessageBus bus)
    {
        // Use Wolverine as a "mediator"
        // This is normally executed inline, in process, but *could*
        // also be invoking this command in an external process
        // and waiting for the success or failure ack
        await bus.InvokeAsync(new CategoriseIncident());

        // Use Wolverine for asynchronous messaging. This could
        // start by publishing to a local, in process queue, or
        // it could be routed to an external message broker -- but
        // the calling code doesn't have to know that
        await bus.PublishAsync(new CategoriseIncident());
    }

The point here is that Wolverine can potentially set your modular monolith architecture up so that it’s possible to extract or move functionality out into separate services later.
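For context, the receiving side of those calls might look something like the sketch below. The properties on `CategoriseIncident` and the `IncidentCategorised` event are hypothetical shapes invented for illustration. The handler relies on Wolverine's naming conventions for discovery and on its treatment of return values as cascading messages, so the handler itself doesn't need to reference Wolverine at all:

```csharp
using System;

// Hypothetical command shape; the parameterless constructor still works
public class CategoriseIncident
{
    public Guid IncidentId { get; init; }
    public string Category { get; init; } = "Unknown";
}

// Hypothetical event announcing the result to other modules
public record IncidentCategorised(Guid IncidentId, string Category);

// Discovered by Wolverine through its handler naming conventions
public static class CategoriseIncidentHandler
{
    // The returned object is treated as a cascading message, so other
    // modules can subscribe to it without touching this module's internals
    public static IncidentCategorised Handle(CategoriseIncident command)
    {
        return new IncidentCategorised(command.IncidentId, command.Category);
    }
}
```

Because the handler is a pure function, it would keep working unchanged whether the command arrives inline, through a local queue, or from an external broker after the module is extracted.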

All that being said about messaging or mediator tools, some of the ugliest systems I’ve ever seen utilized messaging or proto-MediatR command handlers between logical modules. Those systems had code that was almost indecipherable because of too many layers and far too much internal messaging. I’d say part of the root cause of the poor system code was getting the bounded context boundaries wrong, which made the messaging too chatty. Using high ceremony anti-corruption layers also adds a lot of mental overhead to follow information flow through several mapping transformations. One of these systems was using the IDesign architectural approach that I think naturally leads to very poorly factored software architectures and too much harmful code ceremony. I do not recommend.

I guess my only point here is that no matter what well intentioned advice people like me try to give, or any theory of how to make code more maintainable any of us might have, if you find yourself saying to yourself about code that “this is way harder than it should be” you should challenge the approach and look for something different — even if that just leads you right back to where you are now if the alternatives don’t look any better.

One important point here about modular monoliths, a micro service strategy, or a mix of the two: if two or more services/modules are chatty between themselves and very frequently have to be modified at the same time, they’re best described as a single bounded context and should probably be combined into a single service or module.

Summary

Anyway, that’s enough from me on this subject for now, and this took way longer than I meant to spend on it. Time to get my nose back to the grindstone. I am certainly very open to any feedback about the Critter Stack tools’ limitations for modular monolith construction and any suggestions or requests to improve those tools.