I’m flying out to our main office next week, and one of the big things on my agenda is talking over our practices around databases in our software projects. This blog post is just me getting my thoughts and talking points together beforehand. There are two general themes here: how I’d do things in a perfect world, and how to make things better within the constraints of the organization and software architecture that we have now.

I’ve been a big proponent of Agile development processes and practices going back to the early days of Extreme Programming (before Scrum came along and ruined everything in about the same way that Scrappy ruined Scooby Doo cartoons for me as a child). If I’m working in an Agile way, I want:

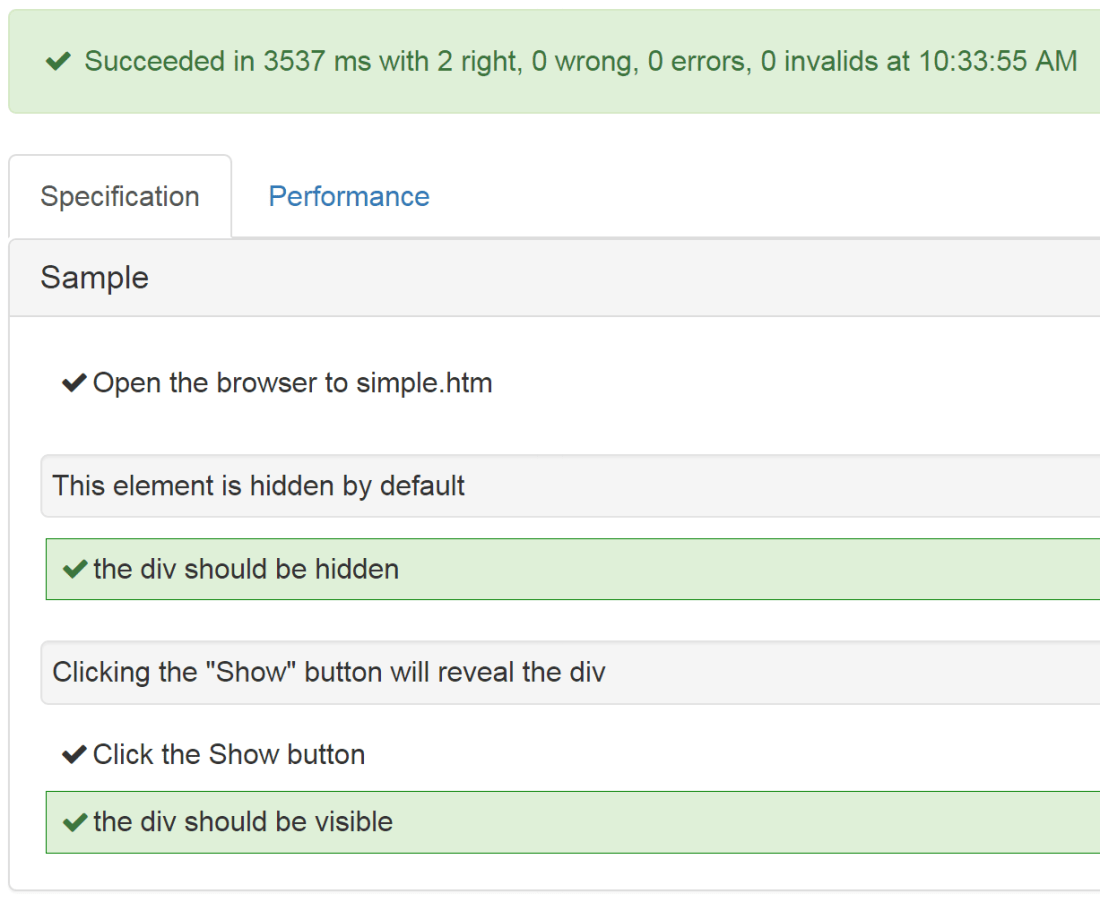

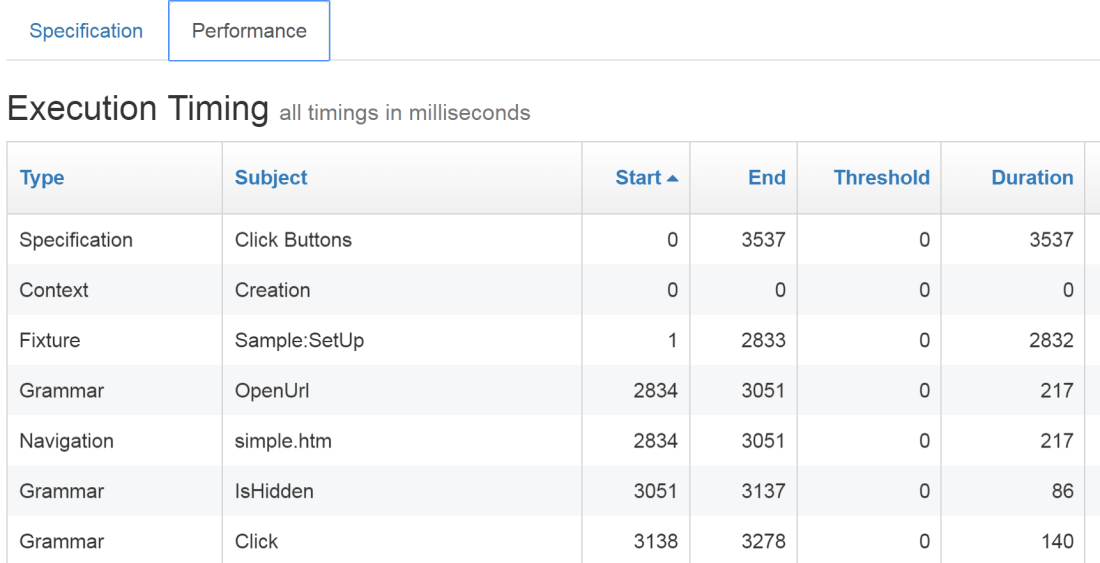

- Strong project and testing automation as feedback cycles that run against all changes to the system

- Some kind of easy traceability from a built or deployed system to exactly the version of the code and its dependencies, preferably automated through your source control processes

- Technologies, tools, and frameworks that provide high reversibility to ease the cost of doing evolutionary software design.

From the get go, relational databases have been one of the biggest challenges in the usage of Agile software practices. They’re laborious to use in automated testing, often expensive in time or money to install or deploy, the change management is a bit harder because you can’t just replace the existing database objects the way we can with other code, and I absolutely think they reduce reversibility in your system architecture compared to other options. That being said, there are some practices and processes I think you should adopt so that your Agile development process doesn’t crash and burn when a relational database is involved.

Keep Business Logic out of the Database, Period.

I’m strongly against having any business logic tightly coupled to the underlying database, but not everyone feels the same way. For one thing, stored procedure languages (T-SQL, PL/SQL, etc.) are very limited in their constructs and tooling compared to the languages we use in our application code (basically anything else). Mostly though, I avoid coupling business logic to the database because having to test through the database is almost inevitably more expensive both in developer effort and test run times than it would be otherwise.

Some folks will suggest that you might want to change out your database later, but to be honest, the only time I’ve ever done that in real life is when we moved from RavenDb to Marten where it had little impact on the existing structure of the code.

In practice this means that I try to:

- Eschew usage of stored procedures. Yes, I think there are still some valid reasons to use sprocs, but I think that they are a “guilty until proven innocent” choice in almost any scenario

- Pull business logic away from the database persistence altogether whenever possible. I think I’ll be going back over some of my old designing for testability blog posts from the Codebetter/ALT.Net days to try to explain to our teams that “wrap the database in an interface and mock it” isn’t always the best solution in every case for testability

- Favor persistence tools that invert the control between the business logic and the database over tooling like Active Record that creates a tight coupling to the database. What this means is that instead of having business logic code directly reading and writing to the database, something else (Dapper if we can, EF if we absolutely have to) is responsible for loading and persisting application state back and forth between the domain in code and the underlying database. The point is to be able to completely test your business logic in complete isolation from the database.
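To make that last point concrete, here is a minimal sketch in Python rather than our actual .NET stack. Everything in it is hypothetical and only for illustration: the `Invoice` type, the discount rule, and the `InvoiceStore` class are invented names, and SQLite stands in for whatever database and persistence tool you really use. The idea is just that the business rule is a plain function with no database access, and a separate piece of code moves state in and out of storage.

```python
import sqlite3
from dataclasses import dataclass


@dataclass(frozen=True)
class Invoice:
    # Hypothetical domain type, invented for this sketch
    id: int
    subtotal_cents: int
    discount_percent: int = 0


def apply_volume_discount(invoice: Invoice) -> Invoice:
    """Pure business rule: no database access, so it can be unit tested directly."""
    percent = 10 if invoice.subtotal_cents >= 100_000 else 0
    return Invoice(invoice.id, invoice.subtotal_cents, percent)


class InvoiceStore:
    """Persistence only: loads and saves state and holds no business rules."""

    def __init__(self, conn: sqlite3.Connection):
        self.conn = conn
        conn.execute(
            "CREATE TABLE IF NOT EXISTS invoices "
            "(id INTEGER PRIMARY KEY, subtotal_cents INTEGER, discount_percent INTEGER)"
        )

    def load(self, invoice_id: int) -> Invoice:
        row = self.conn.execute(
            "SELECT id, subtotal_cents, discount_percent FROM invoices WHERE id = ?",
            (invoice_id,),
        ).fetchone()
        return Invoice(*row)

    def save(self, invoice: Invoice) -> None:
        self.conn.execute(
            "INSERT OR REPLACE INTO invoices VALUES (?, ?, ?)",
            (invoice.id, invoice.subtotal_cents, invoice.discount_percent),
        )


def discount_invoice(store: InvoiceStore, invoice_id: int) -> None:
    """Thin coordinator: load state, call the pure rule, persist the result."""
    store.save(apply_volume_discount(store.load(invoice_id)))
```

With this shape, the bulk of the tests can call `apply_volume_discount` directly with in-memory objects, and only a handful of slower tests need to go through `InvoiceStore` and a real database.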

I would make exceptions for use cases where using the database engine to do set based logic in a stored procedure is a more efficient way to solve the problem, but I haven’t been involved in systems like that for a long time.

Database per Developer/Tester/Environment

My very strong preference and recommendation is to have each developer, tester, and automated testing environment using a completely separate database. The key reason is to isolate each thread of team activity to avoid simultaneous operations or database changes from interfering with each other. Sharing the database makes automated testing much less effective because you often get false negatives or false positives from database activity going on somewhere else at the same time — and yes, this really does happen and I’ve got the scars to prove it.

Additionally, it’s really important for automated testing to be able to tightly control the inputs to a test. While there are some techniques you can use to do this in a shared database (multi-tenancy usage, randomized data), it’s far easier mechanically to just have an isolated database that you can easily control.

Lastly, I really like being able to look through the state of the database after a failed test. That’s certainly possible with a shared database, but it’s much easier in my opinion to look through an isolated database where it’s much more obvious how your code and tests changed the database state.

I should say that I’m concerned here with logical separation between different threads of activity. If you do that with truly separate databases or separate schemas in the same database, it serves the same goal.
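As a rough illustration of that isolation, here is a small Python sketch that gives every test its own throwaway database. It assumes SQLite's in-memory mode purely for convenience, and the `customers` table and helper names are made up; with a server database the same idea would be a schema or database per developer, tester, or build agent.

```python
import sqlite3
from contextlib import contextmanager

# Hypothetical schema, only here so the example has something to create
SCHEMA = """
CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT NOT NULL);
"""


@contextmanager
def isolated_database():
    """Yield a brand-new database that no other developer or test run can touch."""
    conn = sqlite3.connect(":memory:")
    try:
        conn.executescript(SCHEMA)
        yield conn
    finally:
        conn.close()


def count_customers(conn):
    return conn.execute("SELECT COUNT(*) FROM customers").fetchone()[0]


# Each block starts from a known, empty state regardless of what ran before it
with isolated_database() as db:
    db.execute("INSERT INTO customers (name) VALUES ('Acme')")
    assert count_customers(db) == 1

with isolated_database() as db:
    assert count_customers(db) == 0
```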

“The” Database vs. Application Persistence

There are two basic development paradigms to how we think about databases as part of a software system:

- The database is the system and any other code is just a conduit to get data back and forth from the database and its consumers

- The database is merely the state persistence subsystem of the application

I strongly prefer and recommend the 2nd way of looking at that, and act accordingly. That’s admittedly a major shift in thinking from traditional software development or database centric teams.

In practice, this generally means that I very strongly favor the concept of an application database that is only accessed by one application and can be considered to be just part of the application. In this case, I would opt to have all of the database DDL scripts and migrations in the source control repository for the application. This has a lot of benefits for development teams:

- It makes it dirt simple to correlate the database schema changes to the rest of the application code because they’re all versioned together

- Automated testing within continuous integration builds becomes easier because you know exactly what scripts to apply to the database before running the tests

- No need for elaborate cascading builds in your continuous integration setup because it’s just all together
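To show what "scripts versioned with the application" can look like mechanically, here is a deliberately tiny migration runner in Python. It is a sketch under assumptions, not a recommendation of a specific tool: the `MIGRATIONS` list stands in for ordered `.sql` files in the application's repository, and the `schema_version` table is an invented bookkeeping convention.

```python
import sqlite3

# Hypothetical migrations; in a real repository these would be ordered .sql files
# checked in right next to the application code.
MIGRATIONS = [
    (1, "CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT NOT NULL)"),
    (2, "ALTER TABLE customers ADD COLUMN email TEXT"),
]


def current_version(conn):
    """Return the highest migration applied so far, or 0 for an empty database."""
    conn.execute("CREATE TABLE IF NOT EXISTS schema_version (version INTEGER NOT NULL)")
    row = conn.execute("SELECT MAX(version) FROM schema_version").fetchone()
    return row[0] or 0


def migrate(conn):
    """Apply every migration newer than the recorded version, in order."""
    applied = current_version(conn)
    for version, ddl in MIGRATIONS:
        if version > applied:
            conn.execute(ddl)
            conn.execute("INSERT INTO schema_version (version) VALUES (?)", (version,))
    conn.commit()
    return current_version(conn)
```

A continuous integration build would call something like `migrate` against a fresh database before running the tests, so the schema under test always matches the commit being built.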

In contrast, a shared database that’s accessed by multiple applications is a lot more potential friction. The version tracking between the two moving parts is harder to understand and it harms your ability to do effective automated testing. Moreover, it’s wretchedly nasty to allow lots of different applications to float on top of the same database in what I call the “pond scum anti-pattern” because it inevitably causes nasty coupling issues that will almost certainly result in regression bugs due to it being so much harder to understand how changes in the database will ripple out to the applications sharing the database. A much, much younger version of myself walked into a meeting and asked our “operational data store” folks to add a column to a single view and got screamed at for 30 minutes straight on why that was going to be impossible and do you know how much work it’s going to be to test everything that uses that view young man?

Assuming that you absolutely have to continue to use a shared database like my shop does, I’d at least try to ameliorate that by:

- Making damn sure that all changes to that shared database schema are captured in source control somewhere so that you have a chance at effective change tracking

- Having a continuous integration build for the shared database that runs some level of regression tests and then subsequently cascades to all of the applications that touch that database being automatically updated and tested against the latest version of the shared database. I’m expecting some screaming when I recommend that in the office next week;-)

- At the least, having some mechanism for standing up a local copy of the up to date database schema with any necessary baseline data on demand for isolated testing

- Having some way to know, when I’m running or testing the dependent applications, exactly what version of the database schema repository I’m currently using. Git submodules? Distribute the DB via NuGet? Finally do something useful with Docker, distribute the DB as a versioned Docker image, and brag about that to any developer we meet?

The key here is that I want automated builds constantly running as feedback mechanisms to know when and what database changes potentially break (or fix too!) one of our applications. Because of some bad experiences in the past, I’m hesitant to use cascading builds between separate repositories, but it’s definitely warranted in this case until we can get the big central database split up.
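One cheap piece of that feedback loop is having each dependent application fail fast when it is pointed at a shared schema version it was not built against. The Python sketch below assumes, hypothetically, that the shared database exposes its version in a single-row `schema_info` table and that each application pins an expected version in its own repository; neither is something we have today.

```python
import sqlite3

# Hypothetical: the shared schema version this application was last tested against,
# pinned in the application's own repository.
EXPECTED_SCHEMA_VERSION = 42


class SchemaVersionMismatch(RuntimeError):
    pass


def read_schema_version(conn):
    # Assumes a single-row schema_info table maintained by the shared database's build
    return conn.execute("SELECT version FROM schema_info").fetchone()[0]


def assert_compatible_schema(conn, expected=EXPECTED_SCHEMA_VERSION):
    """Raise before any tests run if the database is not the version we expect."""
    actual = read_schema_version(conn)
    if actual != expected:
        raise SchemaVersionMismatch(
            f"application expects schema version {expected}, database reports {actual}"
        )
    return actual
```

However the schema ends up being distributed, a check like this at the start of a test run turns "which database am I actually talking to?" into an explicit failure message instead of a mystery test failure.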

At the end of the day, I still think that the shared database architecture is a huge anti-pattern that most shops should try to avoid and I’d certainly like to see us start moving away from that model more and more.

Document Databases over Relational Databases

I’ve definitely put my money where my mouth is on this (RavenDb early on, and now Marten). In my mind, evolutionary or incremental software design is much easier with document databases for a couple reasons:

- Far fewer changes in the application code result in database schema changes

- It’s much less work to keep the application and database in sync because the storage just reflects the application model

- Less work in the application code to transform the database storage to structures that are more appropriate for the business logic. I.e., relational databases really aren’t great when your domain model is logically hierarchical rather than flat

- It’s a lot less work to tear down and set up known test input states in document databases. With a relational database you frequently end up having to deal with extraneous data you don’t really care about just to satisfy relational integrity concerns. Likewise, tearing down relational database state takes more care and thought than it does with a document database.
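To illustrate the points in that list, here is a toy document store in Python. To be clear, this is not the RavenDb or Marten API, just a hypothetical sketch that keeps JSON in a single SQLite table; the function names and the order shape are invented. It shows a hierarchical object being stored as-is, a new field being added with no schema change, and test state being reset with one statement.

```python
import json
import sqlite3


def open_document_store():
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE documents (id TEXT PRIMARY KEY, body TEXT NOT NULL)")
    return conn


def store(conn, doc_id, document):
    """Persist the whole object graph as one JSON document."""
    conn.execute(
        "INSERT OR REPLACE INTO documents (id, body) VALUES (?, ?)",
        (doc_id, json.dumps(document)),
    )


def load(conn, doc_id):
    row = conn.execute("SELECT body FROM documents WHERE id = ?", (doc_id,)).fetchone()
    return json.loads(row[0]) if row else None


def reset(conn):
    """Tear down all test state; there are no foreign keys to work around."""
    conn.execute("DELETE FROM documents")


db = open_document_store()
order = {"customer": "Acme", "lines": [{"sku": "A-1", "qty": 2}]}
store(db, "orders/1", order)

# Adding a field to the application model needs no DDL change
order["shipping"] = {"method": "ground"}
store(db, "orders/1", order)
assert load(db, "orders/1")["shipping"]["method"] == "ground"
```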

I would still opt to use a relational database for reporting or if there’s a lot of set based logic in your application. For simpler CRUD applications, I think you’re fine with just about any model and I don’t object to relational databases in those cases either.

It sounds trivial, but it does help tremendously if your relational database tables are configured to use cascading deletes when you’re trying to set a database into a known state for tests.
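Here is a small sketch of that cascading delete trick, again in Python with SQLite standing in for the real database. The three tables are hypothetical. Note that SQLite only enforces foreign keys, and therefore cascades, when `PRAGMA foreign_keys = ON` has been set on the connection; other engines have their own rules.

```python
import sqlite3

# Hypothetical parent/child/grandchild tables wired up with cascading deletes
SCHEMA = """
CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT NOT NULL);
CREATE TABLE orders (
    id INTEGER PRIMARY KEY,
    customer_id INTEGER NOT NULL REFERENCES customers(id) ON DELETE CASCADE
);
CREATE TABLE order_lines (
    id INTEGER PRIMARY KEY,
    order_id INTEGER NOT NULL REFERENCES orders(id) ON DELETE CASCADE,
    sku TEXT NOT NULL
);
"""


def open_test_database():
    conn = sqlite3.connect(":memory:")
    conn.execute("PRAGMA foreign_keys = ON")  # SQLite leaves enforcement off by default
    conn.executescript(SCHEMA)
    return conn


def reset_to_known_state(conn):
    """Deleting the root rows removes orders and order lines through the cascades."""
    conn.execute("DELETE FROM customers")
    conn.commit()


def row_count(conn, table):
    # table comes from test code only, never from user input
    return conn.execute(f"SELECT COUNT(*) FROM {table}").fetchone()[0]
```

Without the cascades, the reset step has to delete from `order_lines`, then `orders`, then `customers` in exactly the right order, and that ordering has to be maintained by hand as the schema grows.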

Team Organization

My strong preference is to have a completely self-contained team that has the ability and authority to make any and all changes to their application database, and that’s most definitely been valid in my experience. Having the database managed and owned separately from the development team is a frequent source of friction and definitely a major hit to your reversibility that forces you to do more potentially wrong, upfront design work. It’s much worse when that separate team does not share your priorities or simply works on a very different release schedule. I think it’s far better for a team to own their database or, at the very worst, to have someone who is allowed to touch the database in the team room and team standups.

If I had full control over an organization, I would not have a separate database team. Keeping developers and database folks on separate teams forces your team to spend more time on inter-team coordination, takes away from the team’s flexibility in deciding what they can deliver, and almost inevitably causes a bottleneck constraint for projects. Even worse in my mind is when neither the developers nor the database team really understand how their work impacts the other team.

Even if we say that we have a matrix organization, I want the project teams to have primacy over functional teams. To go farther, I’d opt to make functional teams (developers, testers, DBAs) be virtual teams solely for the purpose of skill acquisition, knowledge sharing, and career growth. My early work experience was being an engineer within large petrochemical project teams, and the project team dominant matrix organization worked a helluva lot better than it did at my next job in enterprise IT that focused more on functional teams.

As an architect now rather than a front line programmer, I constantly worry about not being able to feel the “pain” that my decisions and shared libraries cause developers because that pain is an important feedback mechanism to improve the usability of our shared infrastructure or application architecture. Likewise, I worry that having a separate database team creates a situation where they’re not very aware of the impact of their decisions on developers or vice versa. One of the very important lessons I was taught as an engineer was that it was very important to understand how other engineering disciplines work and what they needed so that we could work better with them.

Now though, I do work in a shop that has historically centralized control of the database in a dedicated database team. To mitigate the problems that naturally arise from this organizational model, we’re trying to have much more bilateral conversations with that team. If we can get away with this, I’d really like to see members of that team spend more time in the project team rooms. I’d also love it if we could steal a page from my original engineering job (Bechtel) and suggest some temporary rotations between the database and developer teams to better appreciate how the other half of that relationship works and what their needs are.