After determining that I wasn’t going to be able to easily move the old FubuMVC codebase to the CoreCLR, I’ve been furiously working on the long proposed and delayed successor to FubuMVC that’s going to be called “Jasper.” I’m trying to get in front of a team doing CoreCLR development at work with a working MVP feature set in the next couple weeks. I’m needing to bring a couple other folks from my shop on to help out and a few folks have been asking what I’m up to just because of the sudden flurry of Github activity, so here’s a big ol’ braindump of the roadmap and architectural direction so far.

First, why do this at all instead of switching to another existing service bus?

- We’re happy with how FubuMVC’s service bus support has worked out

- We need to be “wire compatible” with FubuMVC

- We want to do CoreCLR development right now, and NSB/MassTransit isn’t there yet

- Jasper will be “xcopy deployable,” which we’ve found to be very advantageous for both development and automated testing

- Because I want to — but don’t let my boss hear that

The Vision

Jasper is a next generation application development framework for distributed server side development in .Net (think service bus now and HTTP services later). Jasper is being built on the CoreCLR as a replacement for a small subset of the older FubuMVC tooling. Roughly stated, Jasper intends to keep the things that have been successful in FubuMVC, ditch the things that weren’t, and make the runtime pipeline be much more performant. Oh, and make the stack traces from failures within the runtime pipeline be a whole lot simpler to read — and yes, that’s absolutely worth being one of the main goals.

The current thinking is that we’d have these libraries/Nugets:

- Jasper – The core assembly that will handle bootstrapping, configuration, and the Roslyn code generation tooling

- JasperBus – The service bus features from FubuMVC and an alternative to MediatR

- JasperDiagnostics – Runtime diagnostics meant for development and testing

- JasperStoryteller – Support for hosting Jasper applications within Storyteller specification projects.

- JasperHttp (later) – Build HTTP micro-services on top of ASP.Net Core in a FubuMVC-esque way.

- JasperQueues (later) – JasperBus is going to use LightningQueues as its

primary transport mechanism, but I’d possibly like to re-architect that code to a new library inside of Jasper. This library will not have any references or coupling to any other Jasper project. - JasperScheduler (proposed for much later) – Scheduled or polling job support on top of JasperBus

The Core Pipeline and Roslyn

The basic goal of Jasper is to provide a much more efficient and improved version of the older FubuMVC architecture for CoreCLR development that is also “wire compatible” with our existing FubuMVC 3 services on .Net 4.6.

The original, core concept of FubuMVC was what we called the Russion Doll Model and is now mostly refered to as middleware. The Russian Doll Model architecture makes it relatively easy for developers to reuse code for cross cutting concerns like validation or security without having to write nearly so much explicit code. At this point, many other .Net frameworks support some kind of Russian Doll Model architecture like ASP.Net Core’s middleware or the Behavior model in NServiceBus.

In FubuMVC, that consisted of a couple parts:

- A runtime abstraction for middleware called

IActionBehaviorfor every step in the runtime pipeline for processing an HTTP request or service bus message. Behavior’s were a linked list chain from outermost behavior to innermost. This model was also adapted from FubuMVC into NServiceBus. - A configuration time model we called the

BehaviorGraphthat expressed all the routes and service bus message handling chains of behaviors in the system. This configuration time model made it possible to apply conventions and policies that established what exact middleware ran in what order for each message type or HTTP route. This configuration model also allowed FubuMVC to expose diagnostic visualizations about each chain that was valuable for troubleshooting problems or just flat out understanding what was in the system to begin with.

Great, lots of flexibility and some unusual diagnostics, but the FubuMVC model gets a lot uglier when you go to an “async by default” execution pipeline. Maybe more importantly, it suffers from too many object allocations because of all the little objects getting created on every message or HTTP request that hurt performance and scalability. Lastly, it makes for some truly awful stack traces when things go wrong because of all the bouncing between behaviors in the nested handler chain.

For Jasper, we’re going to keep the configuration model (but simplified), but this time around we’re doing some code generation at runtime to “bake” the execution pipeline in a much tighter package, then use the new runtime code compilation capabilitites in Roslyn to generate assemblies on the fly.

As part of that, we’re trying every possible trick we can think of to reduce object allocations and minimize the work being done at runtime by the underlying IoC container. The NServiceBus team did something very similar with their version of middleware and claimed an absolutely humongous improvement in throughput, so we’re very optimistic about this approach.

What’s with the name?

I think that FubuMVC turned some people off by its name (“for us, by us”). This time around I was going for an unassuming name that was easy to remember and just named it after my hometown (Jasper, MO).

JasperBus

The initial feature set looks to be:

- Running decoupled commands ala MediatR

- In memory transport

- LightningQueues based transport

- Publish/Subscribe messaging

- Request/Reply messaging patterns

- Dead letter queue mechanics

- Configurable error handling rules

- The “cascading messages” feature from FubuMVC

- Static message routing rules

- Subscriptions for dynamic routing — this time we’re looking at using [Consul(https://www.consul.io/)] for the underlying storage

- Delayed messages

- Batch message processing

- Saga support (later) — but this is going to be a complete rewrite from FubuMVC

There is no intention to add the polling or scheduled job functionality that was in FubuMVC to Jasper.

JasperDiagnostics

We haven’t detailed this one out much, but I’m thinking it’s going to be a completely encapsulated ASP.Net Core application using Kestrel to serve some diagnostic views of a running Jasper application. As much as anything, I think this project is going to be a test bed for my shop’s approach to React/Redux and an excuse to experiment with the Apollo client with or without GraphQL. The diagnostics should expose both a static view of the application’s configuration and a live tracing of messages or HTTP requests being handled.

JasperStoryteller

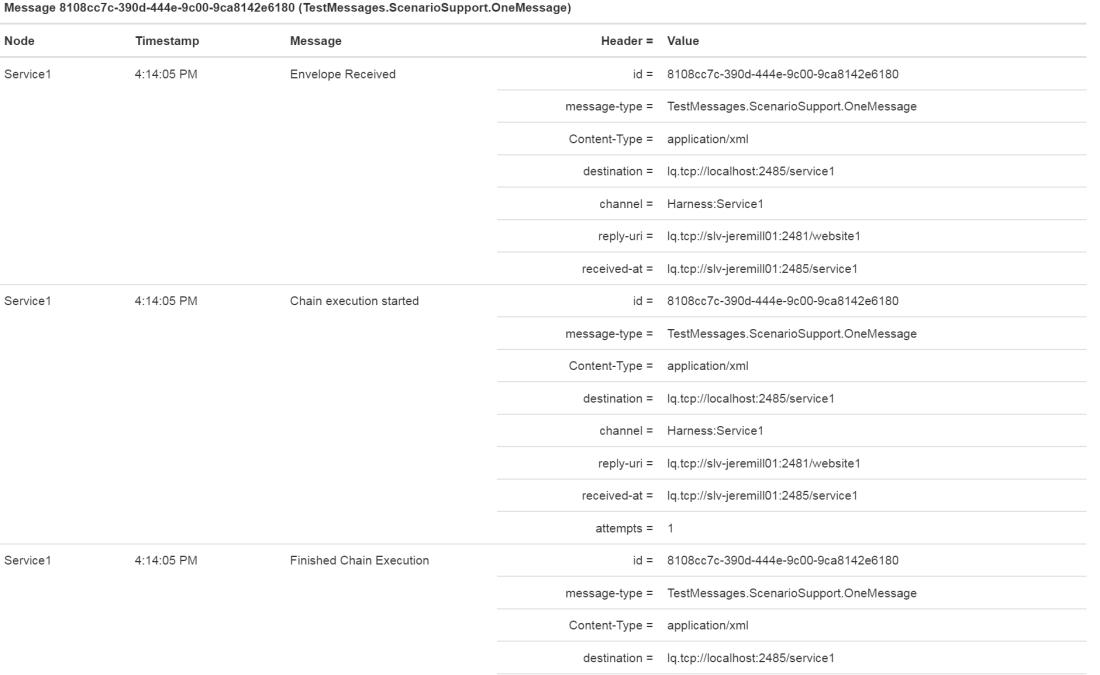

This library won’t do too much, but we’ll at least want a recipe for being able to bootstrap and teardown a Jasper application in Storyteller test harnesses. At a minimum, I’d like to expose a bit of diagnostics on the service bus activity during a Storyteller specification run like we did with FubuMVC in the Storyteller specification results HTML.

JasperHttp

We’re embracing ASP.net Core MVC at work, so this might just be a side project for fun down the road. The goal here is just to provide a mechanism for writing micro-services that expose HTTP endpoints. The I think the potential benefits over MVC are:

- Less ceremony in writing HTTP endpoints (fewer attributes, no required base classes, no marker interfaces, no fluent interfaces)

- The runtime model will be much leaner. We think that we can make Jasper about as efficient as writing purely explicit, bespoke code directly on top of ASP.Net Core

- Easier testability

A couple folks have asked me about the timing on this one, but I think mid-summer is the earliest I’d be able to do anything about it.

JasperScheduler

If necessary, we’ll have another “Feature” library that extends JasperBus with the ability to schedule user supplied jobs. The intention this time around is to just use Quartz as the actual scheduler.

JasperQueues

This is a giant TBD

IoC Usage Plans

Right now, it’s going to be StructureMap 4.4+ only. While this will drive some folks away, it makes the tool much easier to build. Besides, Jasper is already using some StructureMap functionality for its own configuration. I think that we’re only positioning Jasper for greenfield projects (and migration from FubuMVC) anyway.

Regardless, the IoC usage in Jasper is going to be simplistic compared to what we did in FubuMVC and certainly less entailed than the IoC abstractions in ASP.net MVC Core. We theorize that this should make it possible to slip in the IoC container of your choice later.