Multitenancy is a reference to the mode of operation of software where multiple independent instances of one or multiple applications operate in a shared environment. The instances (tenants) are logically isolated, but physically integrated.

Gartner Glossary

In this case, I’m using “multi-tenancy” to mean Marten’s ability to deploy one logical system where the data for each client, organization, or “tenant” is segregated such that users are only ever reading or writing their own tenant’s data, even if that data is all stored in the same database.

In my research and experience, I’ve really only seen three main ways that folks handle multi-tenancy at the database layer (and this is going to be admittedly RDBMS-centric here):

- Use some kind of “tenant id” column in every single database table, then do something behind the scenes in the application layer to always be filtering on that column based on the current user. Marten has supported what I named the “Conjoined” model* since very early versions.

- Separate database schema per tenant within the same database. This model is very unlikely to ever be supported by Marten because Marten compiles database schema names into generated code in many, many places.

- Using a completely separate database per tenant with identical structures. This approach gives you the most complete separation of data between tenants, and could easily give your system much more scalability when the database is your throughput bottleneck. While you could — and many folks did — roll your own version of “tenant per database” with Marten, it wasn’t supported out of the box.

But, drum roll please, Marten V5, which dropped just last week, adds out-of-the-box support for multi-tenancy using a separate database for each tenant. Let’s jump right into the simplest possible usage. Let’s say that we have a small system where all we want is:

- Tenants “tenant1” and “tenant2” to be stored in a database named “database1”

- Tenant “tenant3” should be stored in a database named “tenant3”

- Tenant “tenant4” should be stored in a database named “tenant4”

And that’s that. Just three databases that are known at bootstrapping time. Jumping into the configuration code in a small .NET 6 web API project gives us this code:

    var builder = WebApplication.CreateBuilder(args);

    var db1ConnectionString = builder.Configuration
        .GetConnectionString("database1");

    var tenant3ConnectionString = builder.Configuration
        .GetConnectionString("tenant3");

    var tenant4ConnectionString = builder.Configuration
        .GetConnectionString("tenant4");

    builder.Services.AddMarten(opts =>
    {
        opts.MultiTenantedDatabases(x =>
        {
            // Map multiple tenant ids to a single named database
            x.AddMultipleTenantDatabase(db1ConnectionString, "database1")
                .ForTenants("tenant1", "tenant2");

            // Map a single tenant id to a database, which uses the tenant id as well for the database identifier
            x.AddSingleTenantDatabase(tenant3ConnectionString, "tenant3");
            x.AddSingleTenantDatabase(tenant4ConnectionString, "tenant4");
        });

        // Register all the known document types just
        // to enable database schema management
        opts.Schema.For<User>()
            // This is *only* necessary if you want to put more
            // than one tenant in one database. Which we did.
            .MultiTenanted();
    });
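Conceptually, the configuration above boils down to a lookup from tenant id to database identifier. The snippet below is only a rough sketch of that idea using a plain dictionary. It is not Marten’s implementation, and `databaseByTenant` and `FindDatabase` are hypothetical names invented for illustration.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical illustration only, not Marten code:
// each tenant id resolves to exactly one database identifier
var databaseByTenant = new Dictionary<string, string>
{
    // Two tenants share "database1"
    ["tenant1"] = "database1",
    ["tenant2"] = "database1",

    // Single-tenant databases reuse the tenant id as the database identifier
    ["tenant3"] = "tenant3",
    ["tenant4"] = "tenant4"
};

// Resolve the database for a tenant, failing loudly for unknown tenants
string FindDatabase(string tenantId)
{
    if (databaseByTenant.TryGetValue(tenantId, out var database))
    {
        return database;
    }

    throw new ArgumentOutOfRangeException(nameof(tenantId), $"Unknown tenant id '{tenantId}'");
}

Console.WriteLine(FindDatabase("tenant2")); // prints "database1"
Console.WriteLine(FindDatabase("tenant4")); // prints "tenant4"
```

Failing on an unknown tenant id, rather than silently falling back to some default database, is a deliberate choice in this sketch: a wrong fallback would put one tenant’s data in another tenant’s database.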

Now let’s see this in usage a bit. Knowing that the variable theStore in the test below is the IDocumentStore registered in our system with the configuration code above, this test shows off a bit of the multi-tenancy usage:

    [Fact]
    public async Task can_use_bulk_inserts()
    {
        var targets3 = Target.GenerateRandomData(100).ToArray();
        var targets4 = Target.GenerateRandomData(50).ToArray();

        await theStore.Advanced.Clean.DeleteAllDocumentsAsync();

        // This will load new Target documents into the "tenant3" database
        await theStore.BulkInsertDocumentsAsync("tenant3", targets3);

        // This will load new Target documents into the "tenant4" database
        await theStore.BulkInsertDocumentsAsync("tenant4", targets4);

        // Open a query session for "tenant3". This QuerySession will
        // be connected to the "tenant3" database
        using (var query3 = theStore.QuerySession("tenant3"))
        {
            var ids = await query3.Query<Target>().Select(x => x.Id).ToListAsync();

            ids.OrderBy(x => x).ShouldHaveTheSameElementsAs(targets3.OrderBy(x => x.Id).Select(x => x.Id).ToList());
        }

        using (var query4 = theStore.QuerySession("tenant4"))
        {
            var ids = await query4.Query<Target>().Select(x => x.Id).ToListAsync();

            ids.OrderBy(x => x).ShouldHaveTheSameElementsAs(targets4.OrderBy(x => x.Id).Select(x => x.Id).ToList());
        }
    }

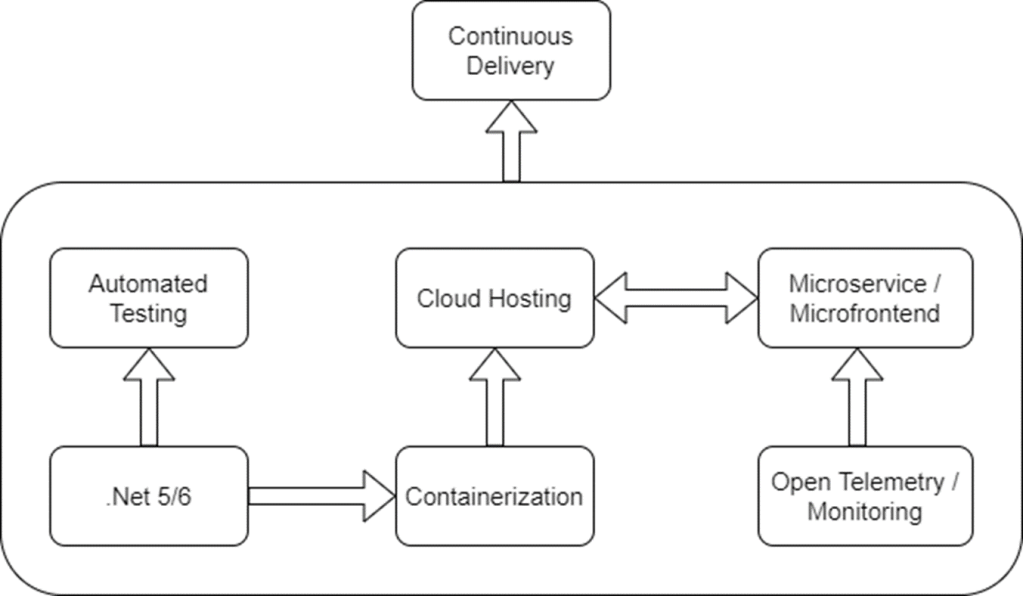

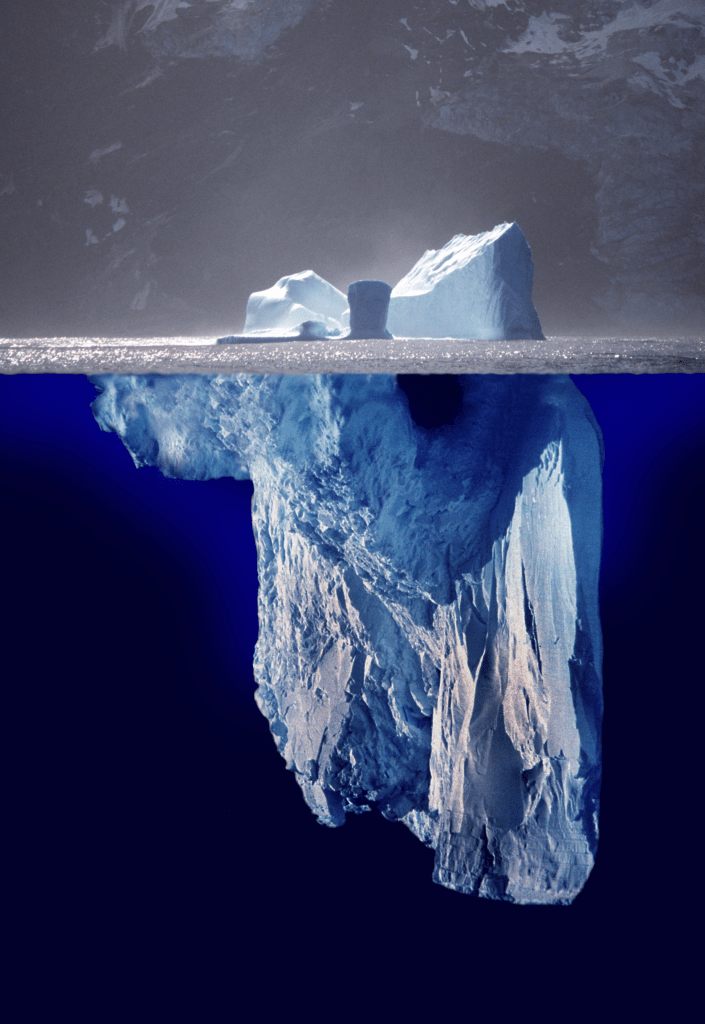

So far, so good. There’s a little extra configuration in this case to express the mapping of tenants to databases, but after that, the mechanics are identical to the previous “Conjoined” multi-tenancy model in Marten. However, as the next set of questions will show, there was a lot of thinking and new infrastructure code under the visible surface because Marten can no longer assume that there’s only one database in the system.

To dive a little deeper, I’m going to try to anticipate the questions a user might have about this new functionality:

Is there a DocumentStore per database, or just one?

DocumentStore is a very expensive object to create because of the dynamic code compilation that happens within it. Fortunately, with this new feature set, there is only one DocumentStore. That one DocumentStore does track its database schema difference detection separately for each database, though.

How much can I customize the database configuration?

The out of the box options for “database per tenant” configuration are pretty limited, and we know that they won’t cover every possible need of our users. No worries though, because this is pluggable by writing your own implementation of our ITenancy interface, then setting that on StoreOptions.Tenancy as part of your Marten bootstrapping.

For more examples, here’s the StaticMultiTenancy model that underpins the example usage up above. There’s also the SingleServerMultiTenancy model that will dynamically create a named database on the same database server for each tenant id.

To apply your custom ITenancy model, set that on StoreOptions like so:

    var store = DocumentStore.For(opts =>
    {
        // NO NEED TO SET CONNECTION STRING WITH THE
        // Tenancy option below
        //opts.Connection("connection string");

        // Apply custom tenancy model
        opts.Tenancy = new MySpecialTenancy();
    });

Is it possible to mix “Conjoined” multi-tenancy with multiple databases?

Yes, it is, and the example code above tried to show that. You’ll still have to mark document types as MultiTenanted() to opt into the conjoined multi-tenancy in that case. We supported that model thinking that this would be helpful for cases where the logical tenant of an application may have suborganizations. Whether or not this ends up being useful is yet to be proven.
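To make the combination concrete: inside the shared “database1,” conjoined tenancy still has to keep “tenant1” data apart from “tenant2” data by a tenant id value. The following is a hedged, in-memory sketch of that filtering idea only. The `rows` list and the `QueryUserNames` function are hypothetical stand-ins, not Marten APIs.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical stand-in for a table in "database1" that carries a tenant id column
var rows = new List<(string TenantId, string UserName)>
{
    ("tenant1", "alice"),
    ("tenant2", "bob"),
    ("tenant1", "carol")
};

// Every query is filtered by the current tenant id, so tenants
// sharing a database never see each other's rows
List<string> QueryUserNames(string tenantId) =>
    rows.Where(row => row.TenantId == tenantId)
        .Select(row => row.UserName)
        .ToList();

Console.WriteLine(string.Join(", ", QueryUserNames("tenant1"))); // prints "alice, carol"
```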

What about the “Clean” functionality?

Marten has some built-in functionality to reset or tear down database state on demand that is frequently used for test automation (think Respawn, but built into Marten itself). With the introduction of database per tenant multi-tenancy, the old IDocumentStore.Advanced.Clean functionality had to become multi-database aware. So when you run this code:

    // theStore is an IDocumentStore
    await theStore.Advanced.Clean.DeleteAllDocumentsAsync();

Marten is deleting all the document data in every known tenant database. To be more targeted, we can also “clean” a single database like so:

    // Find a specific database (theStore is the same IDocumentStore as above)
    var database = await theStore.Storage.FindOrCreateDatabase("tenant1");

    // Delete all document data in just this database
    await database.DeleteAllDocumentsAsync();

What about database management?

Marten tries really hard to manage database schema changes for you behind the scenes so that your persistence code “just works.” Arguably the biggest task for per database multi-tenancy was enhancing the database migration code to support multiple databases.

If you’re using the Marten command line support for the system above, this will apply any outstanding database changes to each and every known tenant database:

dotnet run -- marten-apply

But to be more fine-grained, we can choose to apply changes to only the tenant database named “database1” like so:

dotnet run -- marten-apply --database database1

And lastly, you can interactively choose which databases to migrate like so:

dotnet run -- marten-apply -i

In code, you can direct Marten to detect and apply any outstanding database migrations (between how Marten is configured in code and what actually exists in the underlying database) across all tenant databases upon application startup like so:

    services.AddMarten(opts =>
    {
        // Marten configuration...
    }).ApplyAllDatabaseChangesOnStartup();

The migration code above runs in an IHostedService upon application startup. To avoid collisions between multiple nodes in your application starting up at the same time, Marten uses a PostgreSQL advisory lock so that only one node at a time can be trying to apply database migrations. Lesson learned :)

Or in your own code, assuming that you have a reference to an IDocumentStore object named theStore, you can use this syntax:

    // Apply changes to all tenant databases
    await theStore.Storage.ApplyAllConfiguredChangesToDatabaseAsync();

    // Apply changes to only one database
    var database = await theStore.Storage.FindOrCreateDatabase("database1");
    await database.ApplyAllConfiguredChangesToDatabaseAsync();

Can I execute a transaction across databases in Marten?

Not natively with Marten, but I think you could pull that off with TransactionScope and a separate Marten IDocumentSession object for each database.

Does the async daemon work across the databases?

Yes! Use the IHost integration to set up the async daemon like so:

    services.AddMarten(opts =>
    {
        // Marten configuration...
    })
    // Starts up the async daemon across all known
    // databases on one single node
    .AddAsyncDaemon(DaemonMode.HotCold);

Behind the scenes, Marten is just iterating over all the known tenant databases and actually starting up a separate object instance of the async daemon for each database.

We don’t yet have any way of distributing projection work across application nodes, but that is absolutely planned.

Can I rebuild a projection by database? Or by all databases at one time?

Oopsie. In the course of writing this blog post I realized that we don’t yet support “database per tenant” with the command line projections option. You can create a daemon instance programmatically for a single database like so:

    // Rebuild the TripProjection on just the database named "database1"
    using var daemon = await theStore.BuildProjectionDaemonAsync("database1");
    await daemon.RebuildProjection<TripProjection>(CancellationToken.None);

*I chose the name “Conjoined,” but don’t exactly remember why. I’m going to claim that it was taken from the “Conjoiner” sect in Alastair Reynolds’s “Revelation Space” series.