Alright, so there are hundreds of blog posts out there that explain ASP.Net Core fundamentals and libraries because it’s an “MVP bait” technology (but not so many that ASP.Net Core is adequately “googleable” yet in my opinion, so feel free to write more of those). For my part, I’ve been wanting to take a much deeper dive into ASP.Net Core, with and without MVC, and write a series of critical reviews of the internals and the design decisions behind them.

What am I hoping to accomplish?

- My shop is already well underway in our plunge into ASP.Net Core and I need to be able to support our teams that are using the new stack

- I’m still planning on writing the next generation “Jasper” replacement for FubuMVC that will just be a part of the ASP.Net Core ecosystem

- I don’t know if this is really useful to everybody, but I frequently find that the best way for me to really learn a development library or framework is to imagine how I would go about building it myself

- I just find this kind of thing interesting

If you stumble into this with no idea who I am or why I’m arrogant enough to think I’m qualified to potentially criticize the ASP.Net Core internals, I’m the primary author of both StructureMap (the IoC container) and an alternative “previous generation” OSS web development framework called FubuMVC that tackled a lot of the same problems that ASP.Net Core addresses now that were not part of older ASP.Net MVC or Web API. I’ve also spent a couple years planning out a successor to FubuMVC. I think I can add something to the conversation by contrasting ASP.Net Core with what I did in FubuMVC or other OSS alternatives, how it’s different from Web API or older MVC, and how I’d want to do it all differently in Jasper.

If you do know who I am, don’t worry, I’ll be much more positive than you might think because there are plenty of things I like in the new ASP.Net Core stack. For those of you who don’t know me from Adam, I’m likely to be far more critical than a .Net trainer, consultant, or MVP who frankly has no incentive whatsoever to offer up any kind of negativity.

First Impressions and Topics

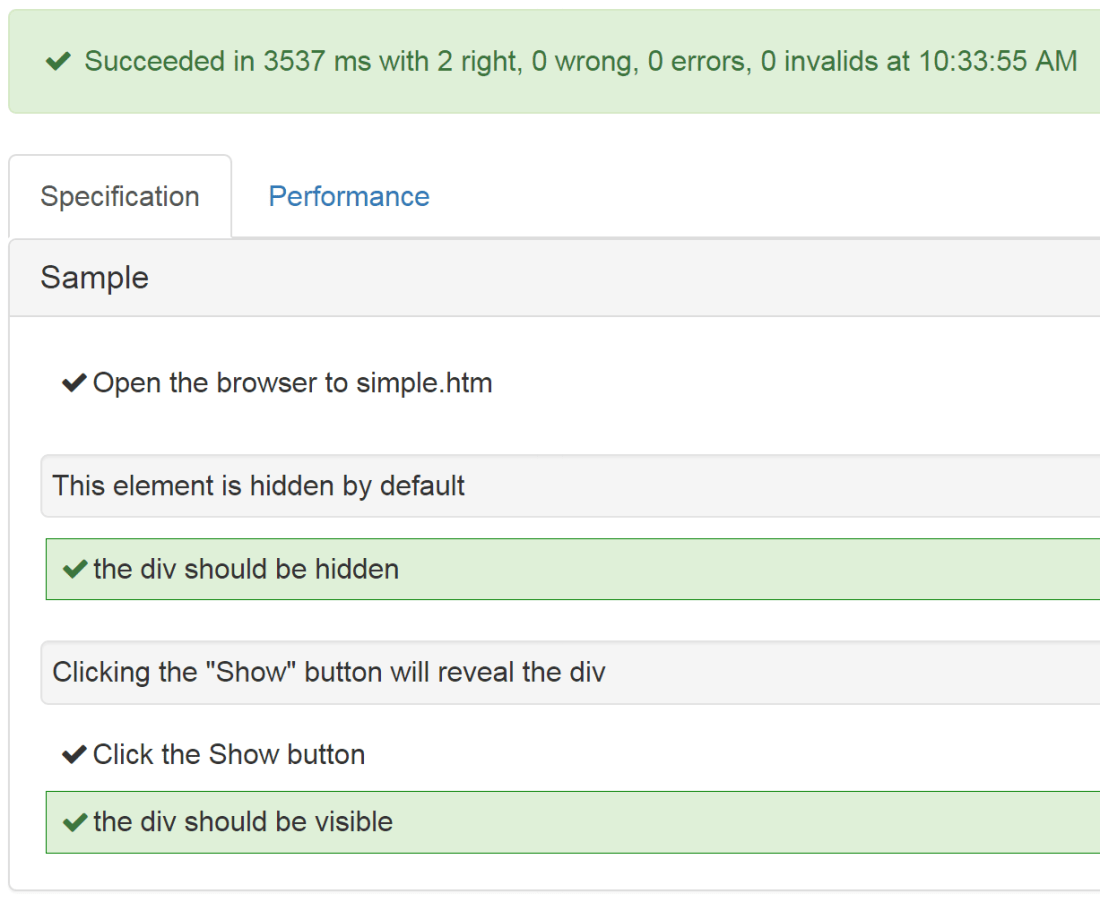

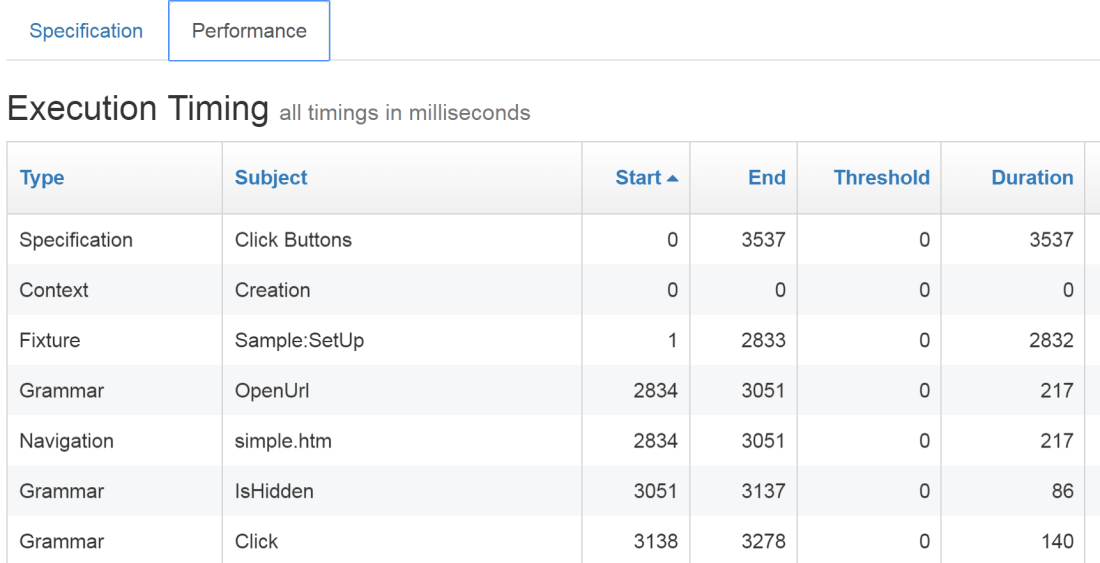

I’ve been working with ASP.Net Core since the fall, but I’ve only been going deep into it over the past couple weeks getting some Storyteller extensions ready for test automation in our shop. Roughly speaking, I’m mostly positive about the core ASP.Net Core foundation and somewhat dubious about ASP.Net Core MVC.

Here are the topics I’m thinking of writing about, along with my first impressions of each.

- Kestrel – It rocks and I think it’s a big improvement over Katana

- Routing – I thought that the routing support and the way that it connected to Controller actions was one of the weakest spots in MVC “Classic.” The attribute-based routing might be an improvement, but I hate how it clutters up the code, and the internals for it in ASP.Net Core look unnecessarily complicated to me. I’m definitely going a very different way for my own Jasper project and I’ll talk about that as well.

- Configuration – I think this is an area where Core is a huge improvement on .Net Classic and the clumsy old System.Configuration namespace. I’m a big fan of strongly typed configuration and I’m happy to see the ASP.Net team embrace this idea. I think that the IOptions model is a little bit clumsy, but it’s easy to bypass altogether, so it’s not really much of a problem.

- Framework Configuration and “Composability” – I’m not sold yet on ASP.Net Core’s facilities for configuring middleware, hosting, and service registrations. I think the mechanics are clumsy and will limit their ability to support more advanced modularity and extensibility use cases, but ask me about that again in a couple weeks when I’ve worked with it much more. My colleagues are probably getting sick to death of me slipping in comments at work to the effect of “FubuMVC handled that much better.”

- Authoring HTTP Endpoints – A fast way to divide developers into opposing groups is to ask them their opinion about “convention over configuration” techniques versus wanting everything to be explicit to avoid “magic.” I’m in the camp that stresses clean code and seeks to eliminate repetitive cruft code from frameworks by utilizing conventions, but the official ASP.Net tooling (and the majority of the .Net developer community) falls into the “magic bad, explicit code good” camp. So far, I think that controller code in ASP.Net Core MVC applications is butt ugly (I disliked the original MVC for this very reason too).

- Accessing and Manipulating HTTP requests – On the positive side, I think that ASP.Net Core’s RequestDelegate signature is much easier for the average developer (and me) to use than the older OWIN “mystery meat” API. On the flip side, I think the HttpContext class is a blob class and I’m not yet buying into the “Feature” model behind it.

- The Runtime Pipeline (how an HTTP request is processed) – I think they did some smart things here, but based on similar technical decisions in FubuMVC, I’d be concerned about performance and unnecessary memory allocations.

- IoC Integration – I think that what they did for IoC integration into ASP.Net Core is going to be problematic for users and it’s already been a nightmare for me with StructureMap. Ironically, I’m going the other way around and working hard to dramatically reduce the role of an IoC container in Jasper’s internals based on our experience with FubuMVC.

- Tag Helpers? I honestly think we had a stronger model in HtmlTags and the html conventions in FubuMVC, but regardless, I don’t think this technique is going to be all that important as web application front ends continue to move to JavaScript-heavy clients. It still might be interesting to consider how to support conventional approaches without confusing the heck out of your users.

- Razor? I don’t know that I care about server side rendering this time around. Right now our thinking is to try to use HTTP/2 push so it’s no big deal to request a static HTML page with an initial JSON payload for our React/Redux applications. If we ever decide we really need to build an isomorphic application, I think I’d vote to just use Node.js for that.

I’m happy to take any requests if there’s something you’d want to see me write about, or feel free to tell me to just go away ;)