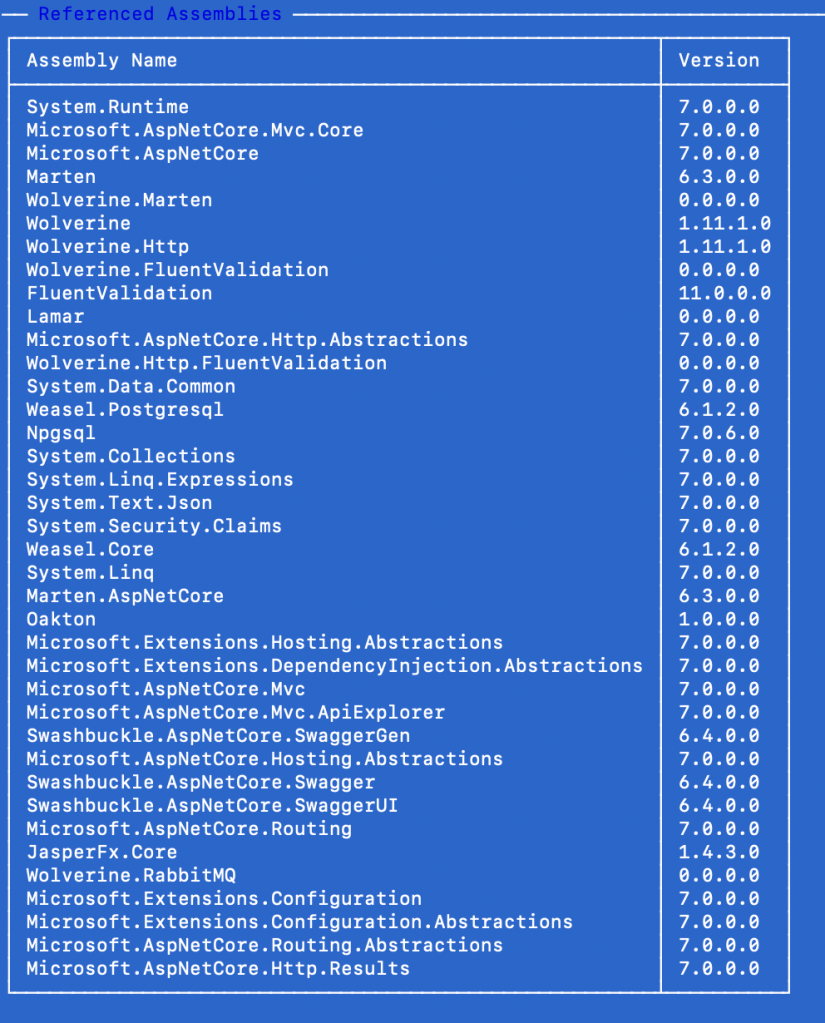

Hey, did you know that JasperFx Software is ready for formal support plans for Marten and Wolverine? Not only are we trying to make the “Critter Stack” tools viable long term options for your shop, we’re also interested in hearing your opinions about the tools and how they should change. We’re also certainly open to helping you succeed with your software development projects on a consulting basis, whether you’re using any part of the Critter Stack or any other .NET server side tooling.

Let’s build a small web service application using the whole “Critter Stack” and their friends, one small step at a time. For right now, the “finished” code is at CritterStackHelpDesk on GitHub.

The posts in this series are:

- Event Storming

- Marten as Event Store

- Marten Projections

- Integrating Marten into Our Application

- Wolverine as Mediator

- Web Service Query Endpoints with Marten

- Dealing with Concurrency

- Wolverine’s Aggregate Handler Workflow FTW!

- Command Line Diagnostics with Oakton

- Integration Testing Harness

- Marten as Document Database

- Asynchronous Processing with Wolverine

- Durable Outbox Messaging and Why You Care!

- Wolverine HTTP Endpoints

- Easy Unit Testing with Pure Functions

- Vertical Slice Architecture

- Messaging with Rabbit MQ

- The “Stateful Resource” Model (this post)

- Resiliency

I’ve personally spent quite a bit of time helping teams and organizations deal with older, legacy codebases where it might easily take a couple of days of working painstakingly through the instructions in a large Wiki page of some sort just to make the codebase work in a local development environment. That’s indicative of a high friction environment, and definitely not what we’d ideally like to have for our own teams.

Consider the external dependencies we’ve used so far in our incident tracking, help desk API:

- Marten for persistence, which requires a set of PostgreSQL database schema objects

- Wolverine’s PostgreSQL-backed transactional outbox support, which also requires its own set of PostgreSQL database schema objects

- Rabbit MQ for asynchronous messaging, which requires queues, exchanges, and bindings to be set up in our message broker for the application to work

That’s quite a bit of infrastructure that needs to be configured within Rabbit MQ and PostgreSQL before we can run our integration tests, or the application itself, for local testing.

Instead of error prone, painstaking manual setup laid out in a Wiki page you can never quite find again, let’s leverage the Critter Stack’s “Stateful Resource” model to quickly get our system ready to run in development.

Building on our existing application configuration, I’m going to add a couple more lines of code to our system’s Program file:

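```csharp
// At the top of Program.cs: AddResourceSetupOnStartup()
// is an extension method from Oakton's resources namespace
using Oakton.Resources;

// ...existing application configuration...

// Depending on your DevOps setup and policies,
// you may or may not actually want this enabled
// in production installations, but some folks do
if (builder.Environment.IsDevelopment())
{
    // This will direct our application to set up
    // all known "stateful resources" at application
    // bootstrapping time
    builder.Services.AddResourceSetupOnStartup();
}
```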

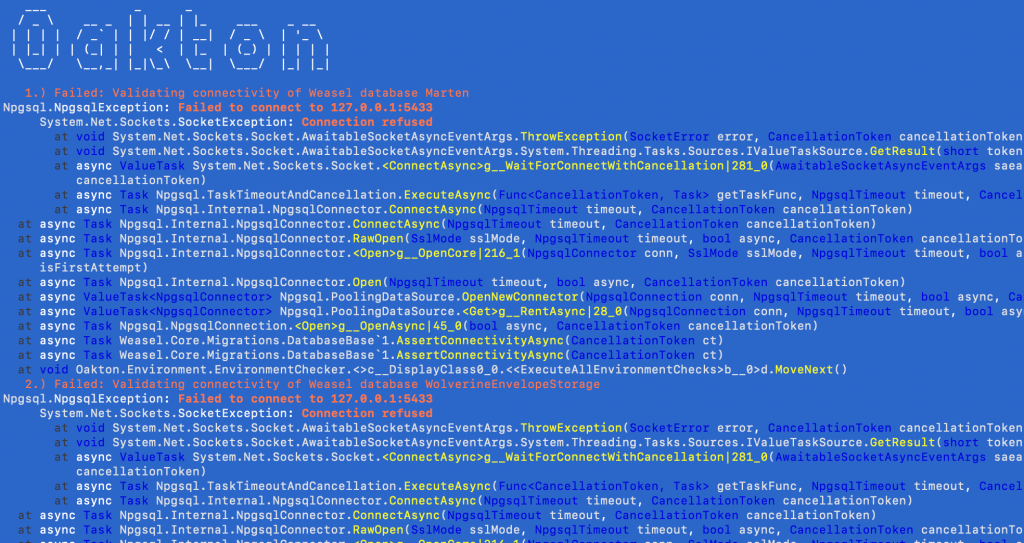

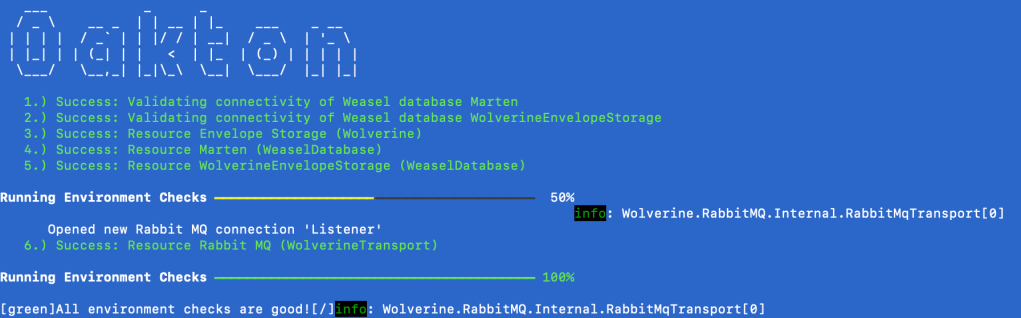

And that’s that. If you’re using the integration test harness like we did in an earlier post, or just starting up the application normally, the application will check for the existence of each of the following, and try to build out anything that’s missing:

- The known Marten document tables and all the database objects to support Marten’s event sourcing

- The necessary tables and functions for Wolverine’s transactional inbox, outbox, and scheduled messages (I’ll add a post later on those)

- The known Rabbit MQ exchanges, queues, and bindings

Of course, your application needs administrative privileges over all of these resources for any of this to work, but you would normally have that at development time at least.
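To make the “check, then build out whatever is missing” idea concrete, here is a minimal, self-contained C# sketch. It deliberately assumes nothing about Oakton’s real types: an in-memory `existing` set stands in for PostgreSQL and Rabbit MQ, and `Resource`, `SetupMissing`, and the resource names are hypothetical names made up for illustration.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical in-memory stand-in for the real infrastructure (PostgreSQL,
// Rabbit MQ). It holds the names of the resources that currently exist.
var existing = new HashSet<string> { "marten-schema" };

// A hypothetical "resource": a name, a way to check whether it exists, and a
// way to create it. This is NOT Oakton's actual IStatefulResource interface.
(string Name, Func<bool> Exists, Action Setup) Resource(string name) =>
    (name, () => existing.Contains(name), () => existing.Add(name));

// Check every known resource and build out only the missing ones.
// Returns the names of the resources that had to be created.
List<string> SetupMissing(IEnumerable<(string Name, Func<bool> Exists, Action Setup)> all)
{
    var made = new List<string>();
    foreach (var resource in all)
    {
        if (resource.Exists()) continue; // already there, leave it alone
        resource.Setup();
        made.Add(resource.Name);
    }
    return made;
}

// Illustrative names only; the real object names come from Marten,
// Wolverine, and the Rabbit MQ configuration.
var resources = new[]
{
    Resource("marten-schema"),
    Resource("wolverine-envelope-tables"),
    Resource("rabbitmq-incidents-queue")
};

Console.WriteLine($"Missing before setup: {resources.Count(r => !r.Exists())}");

var firstRun = SetupMissing(resources);
Console.WriteLine($"Created: {string.Join(", ", firstRun)}");

// A second pass finds nothing left to do
var secondRun = SetupMissing(resources);
Console.WriteLine($"Created on second run: {secondRun.Count}");
```

Because resources that already exist are skipped, the second pass creates nothing. That idempotency is what makes it reasonable to run this kind of setup on every application start in development.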

With this capability in place, the procedure for a new developer getting started with our codebase is to:

- Do a clean `git clone` of our codebase onto their local box

- Run `docker compose up` to start all the infrastructure they need to run the system or the system’s integration tests locally

- Just run the integration tests or start the system and go!

Easy-peasy.

But wait, there’s more! Assuming you have Oakton set up as your command line like we did in an earlier post, you’ve got some command line tooling that can help as well.

If you omit the call to `builder.Services.AddResourceSetupOnStartup()`, you can still set everything up from the command line by running this command just once:

```bash
dotnet run -- resources setup
```

To check on the status of any or all of the resources, you can use:

dotnet run -- resources check

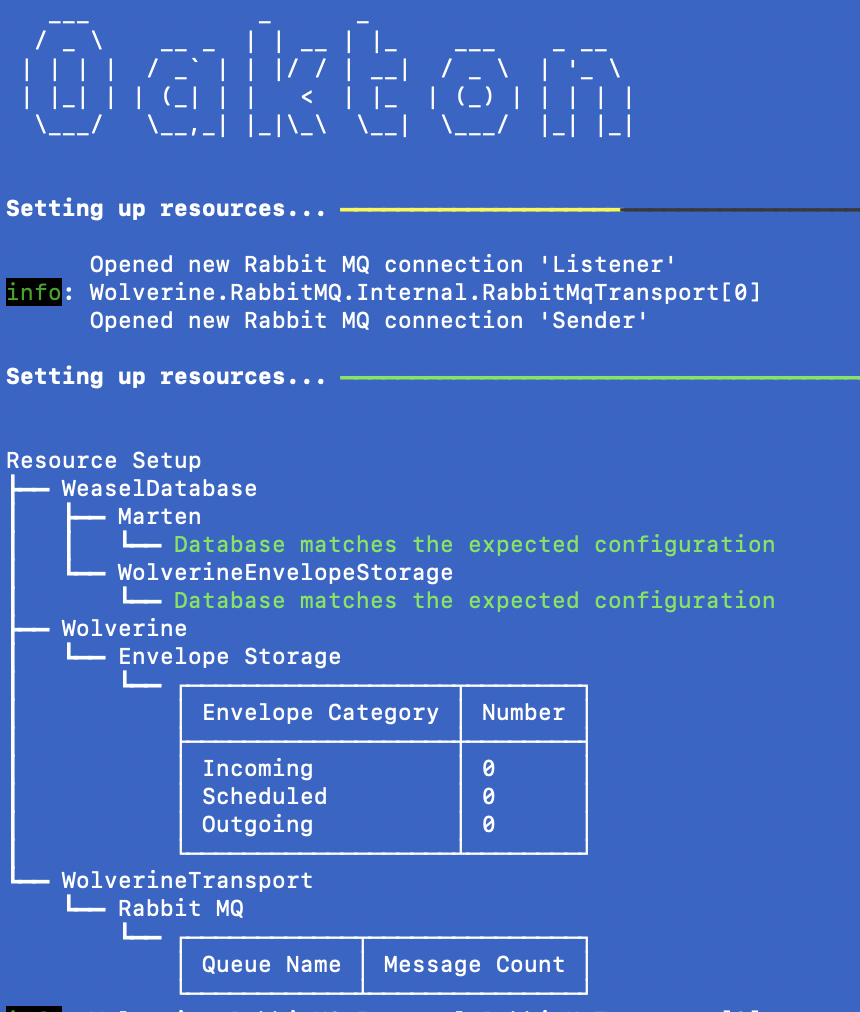

which for the HelpDesk.API, gives you this:

If you want to tear down all the existing data — and at least attempt to purge any Rabbit MQ queues of all messages — you can use:

```bash
dotnet run -- resources clear
```
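As described above, `clear` is about data: the queues stay, but their messages go, and the command makes a best effort at purging them. The small C# sketch below illustrates that best-effort idea under stated assumptions: in-memory queues stand in for Rabbit MQ queues, one resource deliberately fails, and the helper keeps going and reports the failure. `ClearAll` and the queue names are hypothetical, and this post doesn’t say how Oakton itself handles a failed purge.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical in-memory queues standing in for Rabbit MQ queues.
var incidents = new Queue<string>(new[] { "LogIncident", "CategoriseIncident" });
var notifications = new Queue<string>(new[] { "SendEmail" });

// A best-effort "clear": try every resource, remember any failure, and keep
// going instead of stopping at the first problem. Illustration only, not
// Oakton's implementation.
List<string> ClearAll(IEnumerable<(string Name, Action Clear)> all)
{
    var failures = new List<string>();
    foreach (var (name, clear) in all)
    {
        try
        {
            clear();
        }
        catch (Exception ex)
        {
            failures.Add($"{name}: {ex.Message}");
        }
    }
    return failures;
}

var failed = ClearAll(new (string Name, Action Clear)[]
{
    ("incidents", () => incidents.Clear()),
    // Simulates a queue we lack the rights to purge
    ("locked-queue", () => throw new InvalidOperationException("access denied")),
    ("notifications", () => notifications.Clear())
});

// The queues still exist; only their messages are gone
Console.WriteLine($"Messages left: {incidents.Count + notifications.Count}");
Console.WriteLine($"Failures: {string.Join("; ", failed)}");
```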

There are a few other options you can read about in the Oakton documentation for the Stateful Resource model, but for right now, type `dotnet run -- help resources` to see Oakton’s built-in help for the `resources` command, which runs down the supported usage.

Summary and What’s Next

The Critter Stack is trying really hard to create a productive, low friction development ecosystem for your projects. One of the ways it tries to make that happen is by setting up infrastructural dependencies automatically at runtime, so a developer can just “clone n’ go” without the excruciating pain of the multi-page Wiki getting-started instructions so common in legacy codebases.

The stateful resource model is also supported by the Kafka transport (which is also local development friendly) and by the cloud native Azure Service Bus and AWS SQS transports (Wolverine + AWS SQS works just fine with LocalStack). In the cloud native cases, the credentials used by the Wolverine application need the rights to create queues, topics, and subscriptions. The cloud native transports also have an option to prefix the names of all the queues, topics, and subscriptions, so each developer can still get an isolated environment and a better local development story even when relying on cloud native technologies.
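The prefixing idea is easy to picture with a tiny sketch. This is a hypothetical C# helper, not Wolverine’s API (the transport documentation describes the real option): it prepends a per-developer value to every queue or topic name, so two developers sharing one cloud namespace, or one LocalStack instance, never touch each other’s resources. `PrefixAll` and the `alice` and `bob` prefixes are illustrative assumptions.

```csharp
using System;
using System.Linq;

// Hypothetical helper, NOT Wolverine's API: prefix every broker identifier
// with a per-developer value so several developers can share one cloud
// namespace without stepping on each other's queues and topics.
string[] PrefixAll(string prefix, params string[] identifiers) =>
    identifiers.Select(id => $"{prefix}-{id}").ToArray();

var aliceNames = PrefixAll("alice", "incidents", "customer-notifications");
var bobNames = PrefixAll("bob", "incidents", "customer-notifications");

Console.WriteLine(string.Join(", ", aliceNames)); // alice-incidents, alice-customer-notifications

// Same logical queues, but no physical names in common
Console.WriteLine(aliceNames.Intersect(bobNames).Any()); // False
```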

I think I’ll add another post to this series where I switch the messaging to one of the cloud native approaches.

As for what’s next in this increasingly long series, I think we still have logging, OpenTelemetry and metrics, resiliency, and maybe a post on Wolverine’s middleware support. That list is driven somewhat by recency bias around questions I’ve been asked here or there about Wolverine.